Light Detector

Context: During the CIRC competition we must follow a series of red, blue, and infrared lights while searching for crates labeled with AR tags. For full context, consider reading the CIRC Traversal Mission rules or asking a lead for a verbal description.

Problem: We currently have no infrastructure for doing this task fully autonomously. Thus, you will devise a perception pipeline for detecting and publishing the locations of the lights, as well as design an algorithm that traverses these waypoints.

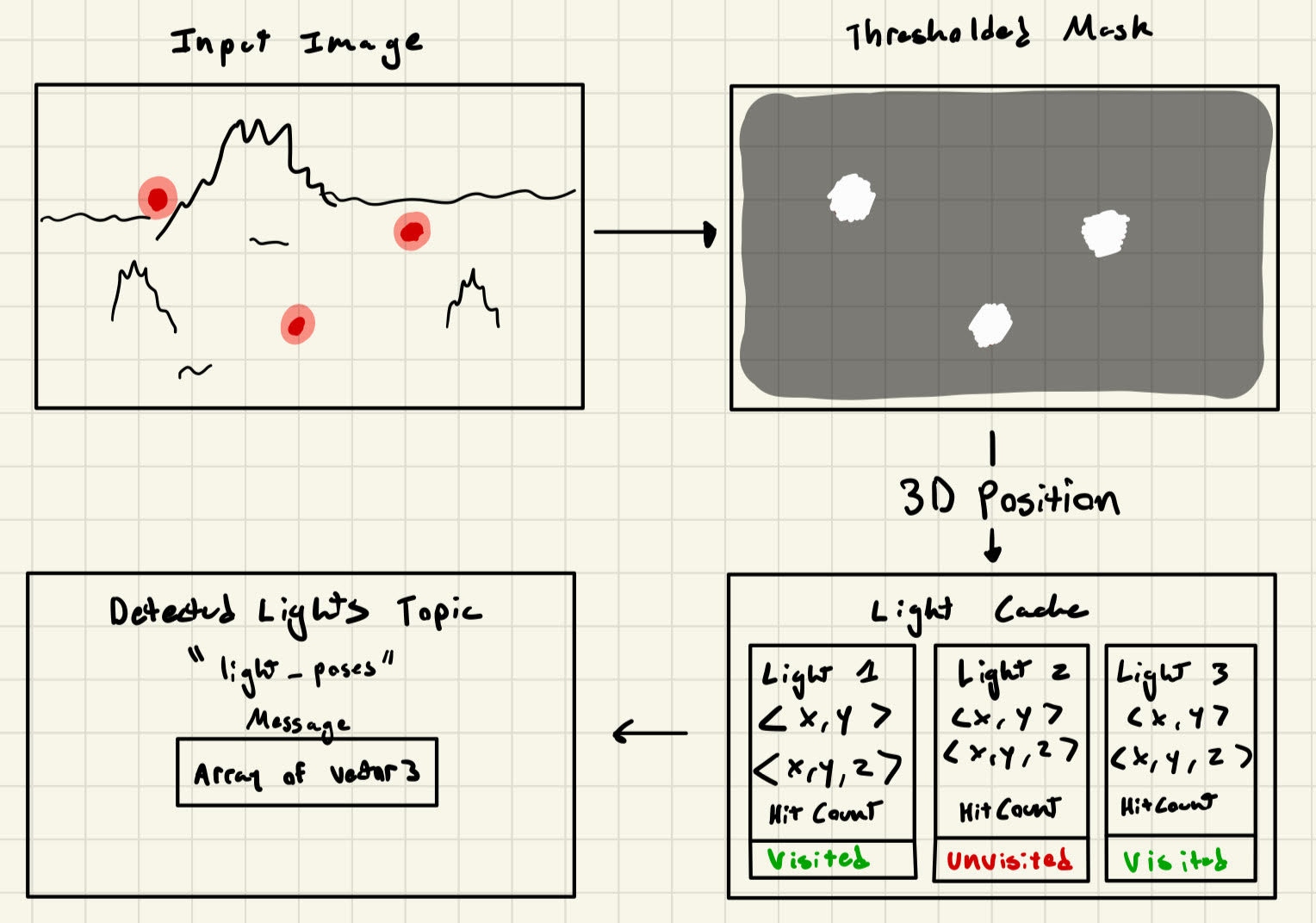

Solution: Use the ZED and run a thresholding algorithm to locate the lights in image space. Then, using the point cloud generated by the ZED, estimate the position of each light in 3D space and add it to a cache. Each time a light is detected at a given location, increment that location's hit counter. Once the counter exceeds a threshold (to be determined through testing in sim and IRL), publish the location to a topic named "light_poses".
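
One possible shape for the cache and hit counter is sketched below. It is not the final design: `Vec3`, `Cell`, `CellHash`, and `LightCache` are hypothetical names, and snapping positions to a fixed-size grid so that nearby detections share one key is an assumption made for illustration. The cell size and threshold still need to be tuned in sim and IRL.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for geometry_msgs/Vector3.
struct Vec3 { double x, y, z; };

// Integer grid cell used as the cache key (assumption: detections that fall
// in the same cell are treated as the same light).
struct Cell {
    std::int64_t i, j, k;
    bool operator==(const Cell& o) const { return i == o.i && j == o.j && k == o.k; }
};

// Combines the three cell indices into one hash value.
struct CellHash {
    std::size_t operator()(const Cell& c) const {
        std::size_t h = std::hash<std::int64_t>{}(c.i);
        h ^= std::hash<std::int64_t>{}(c.j) + 0x9e3779b9u + (h << 6) + (h >> 2);
        h ^= std::hash<std::int64_t>{}(c.k) + 0x9e3779b9u + (h << 6) + (h >> 2);
        return h;
    }
};

class LightCache {
public:
    LightCache(double cellSize, int hitThreshold)
        : cellSize_(cellSize), hitThreshold_(hitThreshold) {
        assert(cellSize > 0.0);
    }

    // Record one detection: bump the hit count of the cell containing p.
    void addDetection(const Vec3& p) {
        Entry& e = cache_[toCell(p)];
        ++e.hits;
        e.position = p;  // keep the most recent position estimate
    }

    // Lights whose hit count has gone beyond the threshold.
    std::vector<Vec3> confirmedLights() const {
        std::vector<Vec3> out;
        for (const auto& kv : cache_) {
            if (kv.second.hits > hitThreshold_) out.push_back(kv.second.position);
        }
        return out;
    }

private:
    struct Entry {
        int hits = 0;
        Vec3 position{0.0, 0.0, 0.0};
    };

    Cell toCell(const Vec3& p) const {
        return Cell{static_cast<std::int64_t>(std::floor(p.x / cellSize_)),
                    static_cast<std::int64_t>(std::floor(p.y / cellSize_)),
                    static_cast<std::int64_t>(std::floor(p.z / cellSize_))};
    }

    double cellSize_;
    int hitThreshold_;
    std::unordered_map<Cell, Entry, CellHash> cache_;
};
```

With `LightCache cache(0.5, 2)`, a light has to be seen three times inside the same 0.5 m cell before `confirmedLights()` returns it. A grid key is simple, but a light sitting on a cell boundary can split its hits across two cells, so a nearest-neighbor merge may turn out to work better.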

Interface (subject to change)

Node: light_detector

Subscribes: sensor_msgs/PointCloud2 (ZED point cloud)

Publishes: Vector of geometry_msgs/Vector3 (Array of Vector3)

The message should look something like this:

Vector3[] lights

Rough Steps:

- Use OpenCV Thresholding (over either RGB or HSV) to detect the lights in image space

- Use OpenCV Contours to determine light image space location

- Use the ZED's point cloud to find the 3D point corresponding to the center of the detected light

- Create a light cache with hit counts (probably using std::unordered_map)

- Update hit counts as new lights are detected

- Publish custom light position message to "light_poses"

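The point cloud lookup step can be sketched as follows, assuming the cloud is organized (one point per image pixel, stored row by row). `Cloud` is a hypothetical, simplified stand-in for the `width`, `height`, `point_step`, `row_step`, and `data` fields of sensor_msgs/PointCloud2. The sketch also assumes that x, y, z are 32-bit floats at byte offsets 0, 4, and 8 of each point, in host byte order. A real node should confirm the layout from the message's `fields` array.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical stand-in for the sensor_msgs/PointCloud2 fields used here.
struct Cloud {
    std::uint32_t width = 0;       // points per row (image columns)
    std::uint32_t height = 0;      // number of rows (image rows)
    std::uint32_t point_step = 0;  // bytes per point
    std::uint32_t row_step = 0;    // bytes per row
    std::vector<std::uint8_t> data;
};

struct Point3 { float x, y, z; };

// Look up the 3D point behind pixel (u, v), where u is the column and v the row.
// Returns false if the pixel is out of range or the point is not finite
// (assumption: pixels without valid depth are stored as NaN or infinity).
bool pointAtPixel(const Cloud& cloud, std::uint32_t u, std::uint32_t v, Point3& out) {
    assert(cloud.point_step >= 3 * sizeof(float));
    if (u >= cloud.width || v >= cloud.height) return false;

    const std::size_t offset = static_cast<std::size_t>(v) * cloud.row_step +
                               static_cast<std::size_t>(u) * cloud.point_step;
    if (offset + 3 * sizeof(float) > cloud.data.size()) return false;

    // memcpy avoids unaligned or aliasing-unsafe reads from the byte buffer.
    Point3 p;
    std::memcpy(&p.x, &cloud.data[offset], sizeof(float));
    std::memcpy(&p.y, &cloud.data[offset + sizeof(float)], sizeof(float));
    std::memcpy(&p.z, &cloud.data[offset + 2 * sizeof(float)], sizeof(float));

    if (!std::isfinite(p.x) || !std::isfinite(p.y) || !std::isfinite(p.z)) return false;
    out = p;
    return true;
}
```

Here `(u, v)` would be the contour center found in image space. When the lookup fails for the exact center pixel, sampling a few neighboring pixels is one possible fallback.
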
Below is a diagram which shows the perception pipeline: