In Project 2, you’ll implement an extended Kalman filter (EKF) for a 2D landmark-based SLAM system. You’ll receive a ROS package, ekf_slam, which contains skeleton code for the filter. Follow the instructions on the last page step by step.

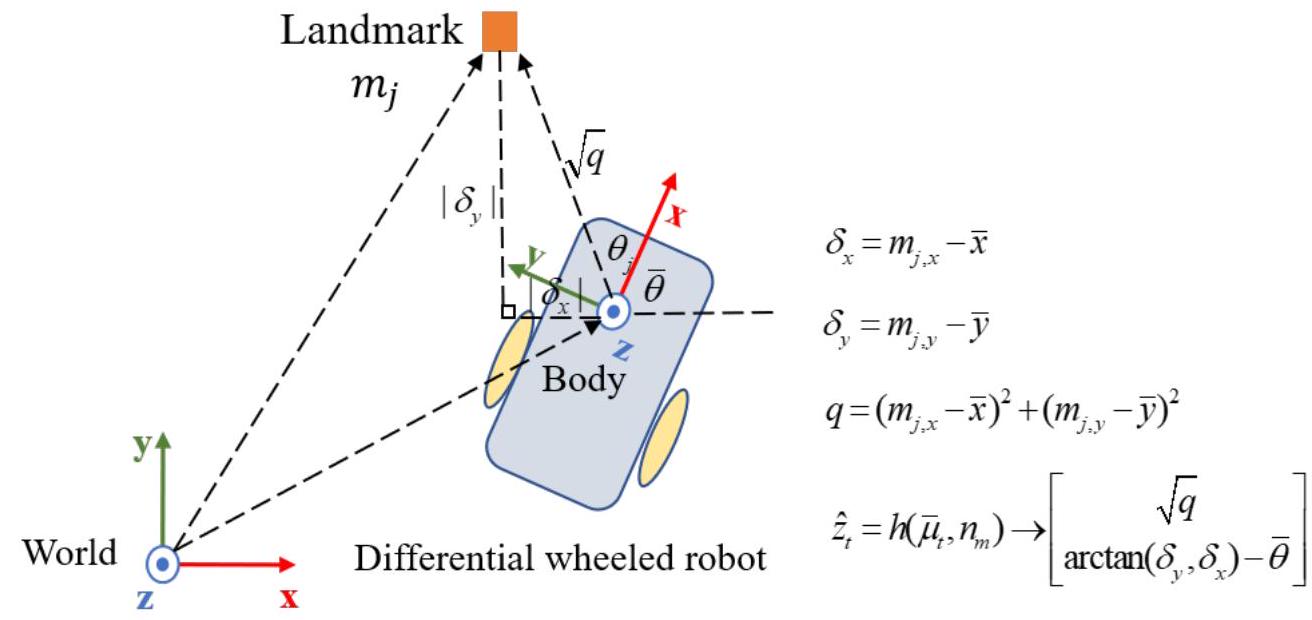

In the prediction phase, we provide two key parameters: the velocity of axis EKFSLAM::run function. Given the differential wheeled robot model, only these two values should be non-zero in theory. We also assume that there are noises in both values, represented by
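As a rough illustration of the prediction step, the sketch below propagates a planar pose with a first-order unicycle (differential-drive) model. It is not the skeleton's code: the `Pose2D` struct, the `predictPose` function, and the parameter names `v`, `omega`, and `dt` are hypothetical. It uses only the C++ standard library rather than Eigen, and it omits the covariance propagation and landmark states that the full filter needs.

```cpp
#include <cmath>

// Hypothetical pose type for illustration only; the skeleton stores its state differently.
struct Pose2D {
    double x;      // position along the world x axis
    double y;      // position along the world y axis
    double theta;  // heading in radians
};

// Propagate the pose over dt seconds, assuming a constant forward velocity v
// and a constant yaw rate omega during the interval (first-order Euler step).
Pose2D predictPose(const Pose2D& p, double v, double omega, double dt) {
    Pose2D out;
    out.x = p.x + v * dt * std::cos(p.theta);
    out.y = p.y + v * dt * std::sin(p.theta);
    const double t = p.theta + omega * dt;
    // Re-wrap the heading so it stays within [-pi, pi].
    out.theta = std::atan2(std::sin(t), std::cos(t));
    return out;
}
```

For example, starting at the origin with `v = 1.0`, `omega = 0.5`, and `dt = 0.1`, this step moves the pose 0.1 along x and rotates it by 0.05 rad.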

In the update phase, you’ll be given code for cylinder detection. This code generates a set of 2D coordinates for the centers of detected cylinders in the body frame. These are represented by Eigen::MatrixX2d cylinderPoints. Your task is to associate cylinder observations with your landmarks in the state and to use observed cylinders to update your pose and landmarks. If a new observation occurs, you should augment your state and covariance matrix. Remember to keep the angle between
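The sketch below illustrates three small helpers that the update phase typically relies on: wrapping an angle, transforming a body-frame cylinder center into the world frame, and associating an observation with the nearest known landmark. All names (`Point2D`, `wrapAngle`, `bodyToWorld`, `associate`, `maxDist`) are hypothetical and not part of the skeleton. The wrapping range [-π, π) is an assumed convention. The Euclidean distance gate is a simplification; a Mahalanobis distance based on the innovation covariance is a common alternative. Only the C++ standard library is used here, not Eigen.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 2D point type for illustration only.
struct Point2D {
    double x;
    double y;
};

// Wrap an angle in radians to [-pi, pi) (assumed convention).
double wrapAngle(double a) {
    const double kPi = 3.14159265358979323846;
    const double kTwoPi = 2.0 * kPi;
    a = std::fmod(a + kPi, kTwoPi);  // now in (-2*pi, 2*pi)
    if (a < 0.0) a += kTwoPi;        // now in [0, 2*pi)
    return a - kPi;
}

// Transform a body-frame point into the world frame given the robot pose.
Point2D bodyToWorld(const Point2D& pb, double rx, double ry, double rtheta) {
    const double c = std::cos(rtheta);
    const double s = std::sin(rtheta);
    return {rx + c * pb.x - s * pb.y, ry + s * pb.x + c * pb.y};
}

// Nearest-neighbour association with a Euclidean gate.
// Returns the index of the matched landmark, or -1 if none lies within maxDist,
// in which case the observation would be treated as a new landmark.
int associate(const Point2D& obsWorld, const std::vector<Point2D>& landmarks,
              double maxDist) {
    int best = -1;
    double bestD2 = maxDist * maxDist;
    for (std::size_t i = 0; i < landmarks.size(); ++i) {
        const double dx = obsWorld.x - landmarks[i].x;
        const double dy = obsWorld.y - landmarks[i].y;
        const double d2 = dx * dx + dy * dy;
        if (d2 < bestD2) {
            bestD2 = d2;
            best = static_cast<int>(i);
        }
    }
    return best;
}
```

A return value of -1 is the case where the state and covariance matrix would be augmented with a new landmark; any other value selects the landmark used in the EKF update.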

Every section of the package that requires your implementation is marked by TODO. If you’re unsure of your next steps, searching for TODO globally in the package could help guide you. If you encounter a bug, a useful debugging strategy is to print out as much information as possible.

```bash
cd /ws
catkin_make
source devel/setup.bash
roslaunch ekf_slam ekf_slam.launch
```

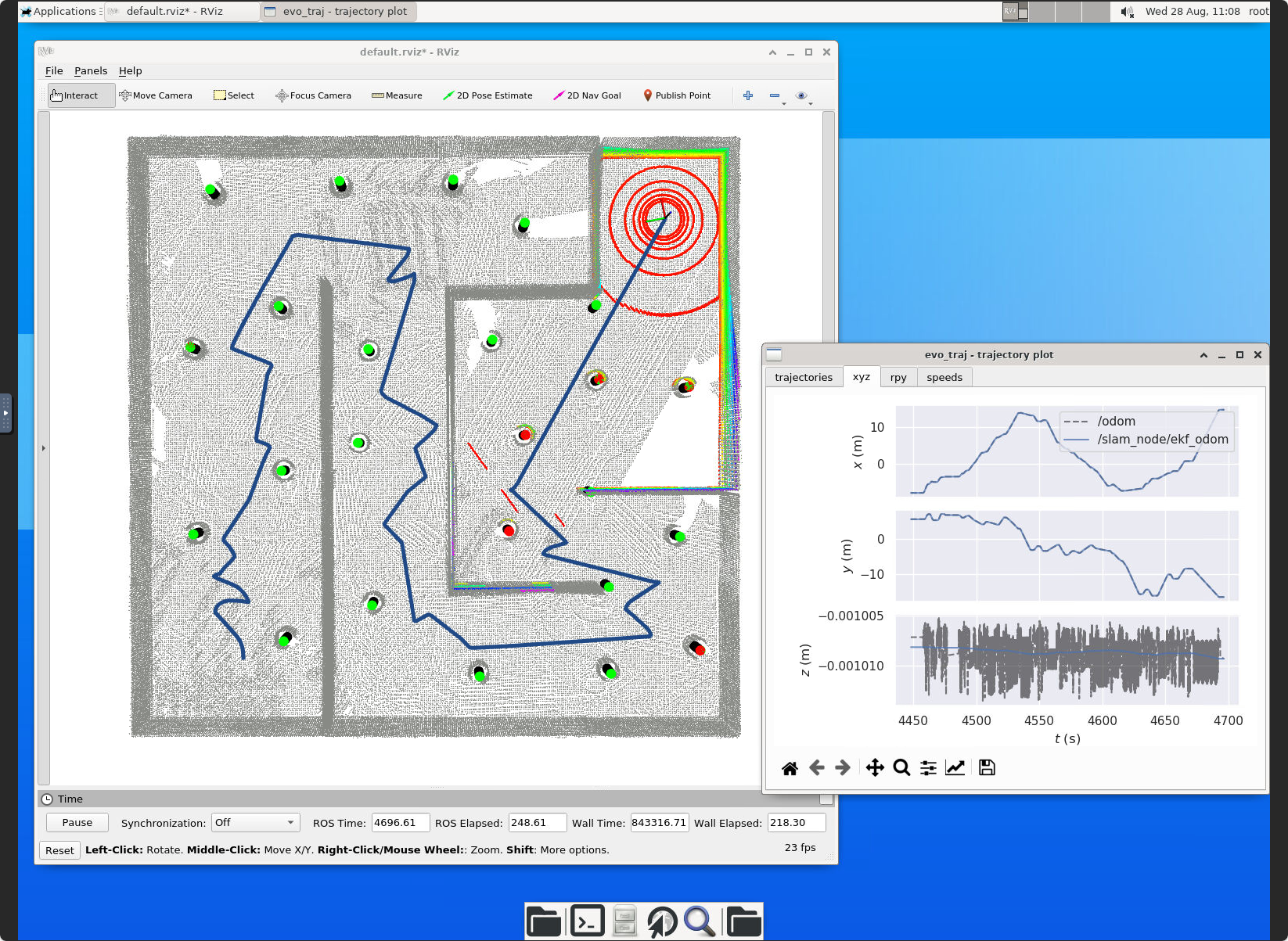

Press `blankspace` to start the odometry estimation.

You can evaluate the performance of your implementation by comparing the estimated odometry with the ground truth. We recommend using evo for this evaluation.

- Record the rosbag data with estimated odometry and ground truth odometry.

```bash
rosbag record /slam_node/ekf_odom /odom -O ekf_odom.bag
```

- Run evo to evaluate the performance.

```bash
evo_traj bag ekf_odom.bag /slam_node/ekf_odom --ref /odom -a -p
evo_ape bag ekf_odom.bag xxxxxxxxx
evo_rpe bag ekf_odom.bag xxxxxxxxx
```

Example of evo_traj:

- Please title your submission "PROJECT2-YOUR-NAMES.zip". Your submission should include a report of at most 2 pages, all files necessary to run your code, a 1-minute video, and a rosbag with the estimated odometry and the ground truth odometry.

- The report should contain at least a visualization of your final point cloud map and trajectory.

- If your code is similar to others’ code or to any open-source code on the internet, the score will be 0 unless the sources are properly referenced in your report. Refer to [1] for details.

- Excessive white space, overly large images, or redundant descriptions in the report will not contribute to a higher score. Simple but efficient code is preferred.

- If you run into a problem, search online first. If it remains unsolved, start a discussion on Canvas.

- If you have no experience with C++, start this project as early as possible. Programming proficiency is not determined by the courses you’ve taken, but rather by ample practice and persistent debugging.

- Submit your code and documents on Canvas before the deadline. Points will be deducted for late submissions.

[1] https://registry.hkust.edu.hk/resource-library/academic-integrity

State:

Robot control:

Motion function: