Is there a linkedin post about the Boston meetup I can share? or should i make one?

+ + + +Feel free to make one! There isn't one yet

+ + + +@Rajesh has joined the channel

+ + + +New to Boston Meetup - Hello all

+ + + +One final question - should we make the dagster unit test job “required” in the ci and how can that be configured?

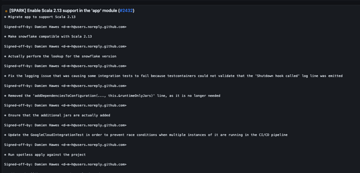

diff --git a/channel/dev-discuss/index.html b/channel/dev-discuss/index.html index 237ae38..26ccc1f 100644 --- a/channel/dev-discuss/index.html +++ b/channel/dev-discuss/index.html @@ -1149,11 +1149,15 @@is it time to support hudi?

@@ -2452,11 +2456,15 @@The full project history is now available at https://openlineage.github.io/slack-archives/. Check it out!

@@ -3357,6 +3365,55 @@ +

+

+

+

+

+

+

+ Feedback requested on the newsletter:

+

+  @@ -4562,12 +4619,12 @@

@@ -4562,12 +4619,12 @@ *Thread Reply:* it’s open source, should we consider testing it out?

+

+  @@ -8169,6 +8226,216 @@

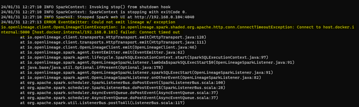

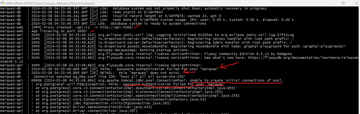

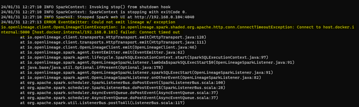

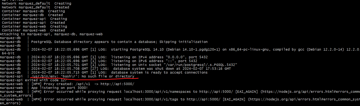

@@ -8169,6 +8226,216 @@ *Thread Reply:* Hi All, I am one of the owners of this repo and working to update this to work with MWAA 2.8.1, with apache-airflow-providers-openlineage==1.4.0. I am facing an issue with my set-up. I am using Redshift SQL as a sample use-case for this and getting an error relating to the Default Extractor. Haven't really looked at this at much detail yet but wondering if you have thoughts? I just updated the env variables to use: AIRFLOWOPENLINEAGETRANSPORT and AIRFLOWOPENLINEAGENAMESPACE and changed operator from PostgresOperator to SQLExecuteQueryOperator.

+[2024-03-07 03:52:55,496] Failed to extract metadata using found extractor <airflow.providers.openlineage.extractors.base.DefaultExtractor object at 0x7fc4aa1e3950> - section/key [openlineage/disabled_for_operators] not found in config task_type=SQLExecuteQueryOperator airflow_dag_id=rs_source_to_staging task_id=task_insert_event_data airflow_run_id=manual__2024-03-07T03:52:11.634313+00:00

+[2024-03-07 03:52:55,498] section/key [openlineage/config_path] not found in config

+[2024-03-07 03:52:55,498] section/key [openlineage/config_path] not found in config

+[2024-03-07 03:52:55,499] Executing:

+ insert into event

+ SELECT eventid, venueid, catid, dateid, eventname, starttime::TIMESTAMP

+ FROM s3_datalake.event;

*Thread Reply:* I'll look into it 🙂

+ + + +*Thread Reply:* @Paul Wilson Villena It looks like a small mistake in the OL, that I'll fix in the next version - we missed adding a callback there, and getting the airflow configuration raises error when disabled_for_operators is not defined in the airflow.cfg file / the env variable. For now it should help to simply add the <a href="https://airflow.apache.org/docs/apache-airflow-providers-openlineage/1.4.0/configurations-ref.html#id1">[openlineage]</a> section to airflow.cfg, and set disabled_for_operators="" , or just export AIRFLOW__OPENLINEAGE__DISABLED_FOR_OPERATORS="" ,

*Thread Reply:* Will be released in the next provider version: https://github.com/apache/airflow/pull/37994

+ + + +*Thread Reply:* Hi @Kacper Muda it seems I need to also set this: Otherwise this error persists:

+section/key [openlineage/config_path] not found in config

+os.environ["AIRFLOW__OPENLINEAGE__CONFIG_PATH"]=""

*Thread Reply:* Yes, sorry for missing that. I fixed in the code and forgot to mention it. If You were to not use AIRFLOW__OPENLINEAGE__TRANSPORT You'd have to set it to empty string as well, as it's missing the same fallback 🙂

*Thread Reply:* I see it too:

+

+ Spotted!

+

+  @@ -9508,12 +9775,12 @@

@@ -9508,12 +9775,12 @@ *Thread Reply:* a moment earlier it makes more context

+

+  @@ -11870,12 +12137,12 @@

@@ -11870,12 +12137,12 @@ execution_date remains the same. If I run a backfill job for yesterday, then delete it and run it again, I get the same ids. I'm trying to understand the rationale behind this choice so we can determine whether it's a bug or a feature. 😉

+

+  @@ -12717,6 +12984,43 @@

@@ -12717,6 +12984,43 @@  +

+

+

+

+

+

+

+ *Thread Reply:*

+

+  @@ -14438,13 +14742,50 @@

@@ -14438,13 +14742,50 @@ *Thread Reply:* @Harel Shein thanks for the suggestion. Lmk if there's a better way to do this, but here's a link to Google's visualizations: https://docs.google.com/forms/d/1j1SyJH0LoRNwNS1oJy0qfnDn_NPOrQw_fMb7qwouVfU/viewanalytics. And a .csv is attached. Would you use this link on the page or link to a spreadsheet instead?

+

+

+

+

+

+

+

+ Thanks for any feedback on the Mailchimp version of the newsletter special issue before it goes out on Monday:

+

+  @@ -15607,12 +15989,12 @@

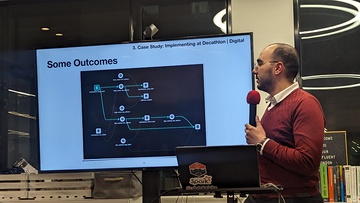

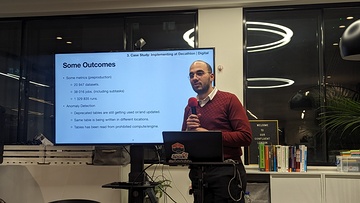

@@ -15607,12 +15989,12 @@ Decathlon showed part of one of their graphs last night

+

+  @@ -15680,12 +16062,12 @@

@@ -15680,12 +16062,12 @@ *Thread Reply:* some metrics too

+

+  @@ -16764,12 +17146,12 @@

@@ -16764,12 +17146,12 @@ *Thread Reply:*

+

+  @@ -18662,6 +19044,166 @@

@@ -18662,6 +19044,166 @@ *Thread Reply:* it got merged 👀

+ + + +*Thread Reply:* amazing feedback on a 10k line PR 😅

+ + + +*Thread Reply:* maybe they have policy that feedback starts from 10k lines

+ + + +*Thread Reply:* it wasn’t enough

+ + + +*Thread Reply:* too big to review, LGTM

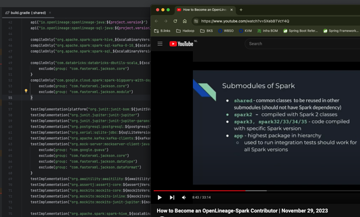

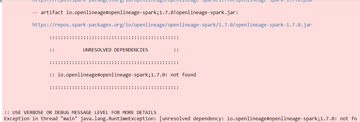

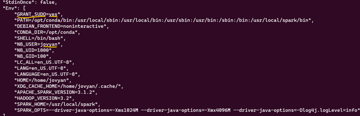

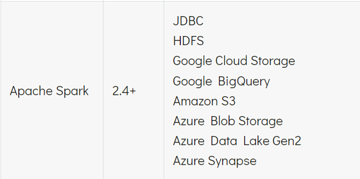

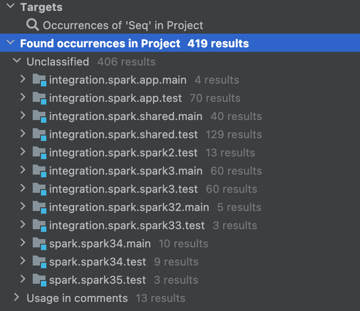

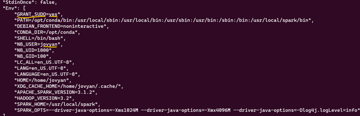

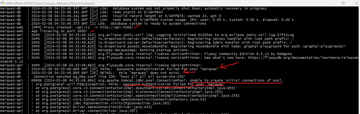

+ + + +I just noticed this. shared should not have a dependency on spark. 👀

+

+  @@ -19635,12 +20177,12 @@

@@ -19635,12 +20177,12 @@ *Thread Reply:* also 🙂

+

+  @@ -22571,12 +23113,12 @@

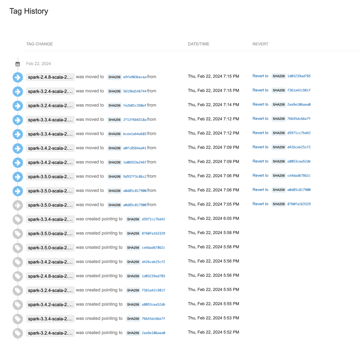

@@ -22571,12 +23113,12 @@ *Thread Reply:* People still love to use 2.4.8 🙂

+

+ *Thread Reply:* not sure it did exactly what we want but probably okay for now

+

+  @@ -23515,6 +24057,58 @@

@@ -23515,6 +24057,58 @@ *Thread Reply:* to me the risk is more to introduce vulnerabilities/backdoors in the OpenLineage released artifact through pushing a cached image that modifies the result of the build.

+ + + +*Thread Reply:* The idea of saving the image signature in the repo is that you can not use a new image in the build without creating a new commit and traceability.

+ + + +gotta skip today meeting. I hope to see you all next week!

+ + + +The meetup I mentioned about OpenLineage/OpenTelemetry: https://x.com/J_/status/1565162740246671360 +I speak in English but other two speakers speak in Hebrew

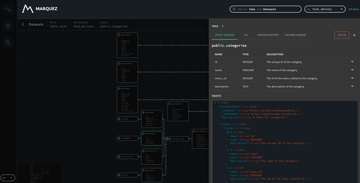

+ +

+

+

+

+

+

+

+ *Thread Reply:* the slides from my part: https://docs.google.com/presentation/d/1BLM2ocs2S64NZLzNaZz5rkrS9lHRvtr9jUIetHdiMbA/edit#slide=id.g11e446d5059_0_1055

+ + + +*Thread Reply:* thanks for sharing that, that otel to ol comparison is going to be very useful for me today :)

+ + + +Could use another pair of eyes on this month's newsletter draft if anyone has time today

+ + +

+

+

+

+

+

+

+ *Thread Reply:* LGTM 🙂

+ + + +Hey, I created new Airflow AIP. It proposes instrumenting Airflow Hooks and Object Storage to collect dataset updates automatically, to allow gathering lineage from PythonOperator and custom operators. +Feel free to comment on Confluence https://cwiki.apache.org/confluence/display/AIRFLOW/AIP-62+Getting+Lineage+from+Hook+Instrumentation +or on Airflow mailing list: https://lists.apache.org/thread/5chxcp0zjcx66d3vs4qlrm8kl6l4s3m2

+ + + +Hey, does anyone want to add anything here (PR that adds AWS MSK IAM transport)? It looks like it's ready to be merged.

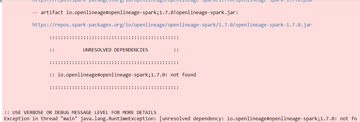

+ + + +did we miss a step in publishing 1.9.1? going https://search.maven.org/remote_content?g=io.openlineage&a=openlineage-spark&v=LATEST|here gives me the 1.8 release

+ + + +*Thread Reply:* oh, this might be related to having 2 scala versions now, because I can see the 1.9.1 artifacts

+ + + +*Thread Reply:* yes

+ + + +*Thread Reply:* we may need to fix the docs then https://openlineage.io/docs/integrations/spark/quickstart/quickstart_databricks

+ + + +*Thread Reply:* another place 🙂

+ + + +*Thread Reply:* https://github.com/OpenLineage/docs/pull/299

+*Thread Reply:* thx :gh_merged:

+ + + +Hi, here's a tentative agenda for next week's TSC (on Wednesday at 9:30 PT):

+ +*Thread Reply:* I thought @Paweł Leszczyński wanted to present?

+ + + +*Thread Reply:* What was the topic? Protobuf or built-in lineage maybe? Or the many docs improvements lately?

+ + + +*Thread Reply:* I think so? https://github.com/OpenLineage/OpenLineage/pull/2272

+ + + +*Thread Reply:* Imagine there are lots of folks who would be interested in a presentation on that

+ + + +*Thread Reply:* I think so too 🙂

+ + + +*Thread Reply:* There two things worth presenting: circuit breaker +/or built-in lineage (once it gets merged).

+ + + +*Thread Reply:* updating the agenda

+ + + +is there a reason why facet objects have _schemaURL property but BaseEvent has schemaURL?

*Thread Reply:* yeah, we use _ to avoid naming conflicts in a facet

*Thread Reply:* same goes for producer

*Thread Reply:* Facets have user defined fields. So all base fields are prefixed

+ + + +*Thread Reply:* Base events do not

+ + + +*Thread Reply:* it should be a made more clear… recently ran into the issue when validating OL events

+ + + +*Thread Reply:* it might be another missing point but we set _producer in BaseFacet:

+def __attrs_post_init__(self) -> None:

+ self._producer = PRODUCER

+but we don’t do that for producer in BaseEvent

*Thread Reply:* is this supposed to be like that?

+ + + +*Thread Reply:* I’m kinda lost 🙂

+ + + +*Thread Reply:* We should set producer in baseevent as well

+ + + +*Thread Reply:* The idea is the base event might be produced by the spark integration but the facet might be produced by iceberg library

+ + + +*Thread Reply:* > The idea is the base event might be produced by the spark integration but the facet might be produced by iceberg library

+right, it doesn’t require adding _ , it just helps in making the difference

and also this reason too: +> Facets have user defined fields. So all base fields are prefixed +> Base events do not

+ + + +*Thread Reply:* Since users can create custom facets with whatever fields we just tell Them that “_**” is reserved.

+ + + +*Thread Reply:* So the underscore prefix is a mechanism specific to facets

+ + + +*Thread Reply:* 👍

+ + + +*Thread Reply:* last question:

+we don’t want to block users from setting their own _producerfield? it seems the only way now is to use openlineage.client.facet.set_producer method to override default, you can’t just do RunEvent(…, _producer='my_own')

*Thread Reply:* The idea is the producer identifies the code that generates the metadata. So you set it once and all the facets you generate have the same

+ + + +*Thread Reply:* mhm, probably you don’t need to use several producers (at least) per Python module

+ + + +*Thread Reply:* In airflow each provider should have its own for the facets they produce

+ + + +*Thread Reply:* just searched for set_producer in current docs - no results 😨

*Thread Reply:* a number of things will get to the right track after I’m done with generating code 🙂

+ + + +*Thread Reply:* Thanks for looking into that. If you can fix the doc by adding a paragraph about that, that would be helpful

+ + + +*Thread Reply:* I can create an issue at least 😂

+ + + +*Thread Reply:* there you go: +https://github.com/OpenLineage/docs/issues/300 +if I missed something please comment

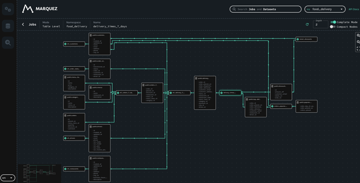

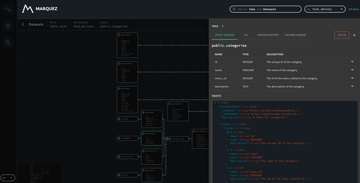

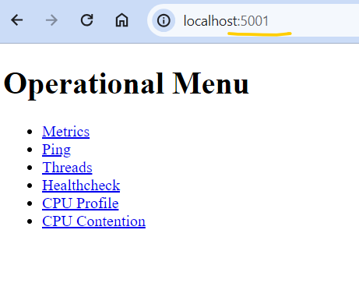

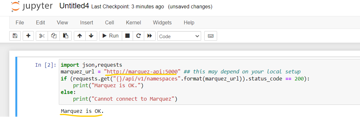

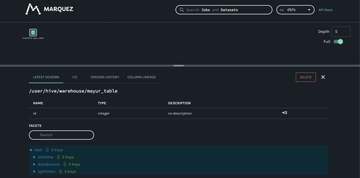

+I feel like our getting started with openlineage page is mostly a getting started with Marquez page. but I'm also not sure what should be there otherwise.

+*Thread Reply:* https://openlineage.io/docs/guides/spark ?

+*Thread Reply:* Unfortunately it's probably not that "quick" given the setup required..

+ + + +*Thread Reply:* Maybe better? https://openlineage.io/docs/integrations/spark/quickstart/quickstart_local

+*Thread Reply:* yeah, that's where I was struggling as well. should our quickstart be platform specific? that also feels strange.

+ + + +Quick question, for the spark.openlineage.facets.disabled property, why do we need to include [;] in the value? Why can't we use , to act as the delimiter? Why do we need [ and ] to enclose the string?

*Thread Reply:* There was some concrete reason AFAIK right @Paweł Leszczyński?

+ + + +*Thread Reply:* We do have a logic that converts Spark conf entries to OpenLineageYaml without a need to understand its content. I think [] was added for this reason to know that Spark conf entry has to be translated into an array.

Initially disabled facets were just separated by ; . Why not a comma? I don't remember if there was any problem with this.

https://github.com/OpenLineage/OpenLineage/pull/1271/files -> this PR introduced it

+ +https://github.com/OpenLineage/OpenLineage/blob/1.9.1/integration/spark/app/src/main/java/io/openlineage/spark/agent/ArgumentParser.java#L152 -> this code check if spark conf value is of array type

+Hi team, do we have any proposal or previous discussion of Trino OpenLineage integration?

+ + + +*Thread Reply:* There is old third-party integration: https://github.com/takezoe/trino-openlineage

+ +It has right idea to use EventListener, but I can't vouch if it works

+ + + +*Thread Reply:* Thanks. We are investigating the integration in our org. It will be a good start point 🙂

+ + + +*Thread Reply:* I think the ideal solution would be to use EventListener. So far we only have very basic integration in Airflow's TrinoOperator

+ + + +*Thread Reply:* The only thing I haven't really checked out what are real possibilities for EventListener in terms of catalog details discovery, e.g. what's database connection for the catalog.

+ + + +*Thread Reply:* Thanks for calling out this. We will evaluate and post some observation in the thread.

+ + + +*Thread Reply:* Thanks Peter +Hey Maciej/Jakub +Could you please share the process to follow in terms of contributing a Trino open lineage integration. (Design doc and issue ?)

+ +There was an issue for trino integration but it was closed recently. +https://github.com/OpenLineage/OpenLineage/issues/164

+*Thread Reply:* It would be great to see design doc and maybe some POC if possible. I've reopened the issue for you.

+ +If you get agreement around the design I don't think there are more formal steps needed, but maybe @Julien Le Dem has other idea

+ + + +*Thread Reply:* Trino has their plugins directory btw: +https://github.com/trinodb/trino/tree/master/plugin +including event listeners like: https://github.com/trinodb/trino/tree/master/plugin/trino-mysql-event-listener

+ + + +*Thread Reply:* Thanks Maciej and Jakub +Yes the integration will be done with Trino’s event listener framework that has details around query, source and destination dataset details etc.

+ +> It would be great to see design doc and maybe some POC if possible. I’ve reopened the issue for you. +Thanks for re-opening the issue. We will add the design doc and POC to the issue.

+ + + +*Thread Reply:* I agree with @Maciej Obuchowski, a quick design doc followed by a POC would be great. +The integration could either live in OpenLineage or Trino but that can be discussed after the POC.

+ + + +*Thread Reply:* (obviously, adding it to the trino repo would require aproval from the trino community)

+ + + +*Thread Reply:* Gentleman, we are also actively looking into this topic with the same repo from @takezoe as our base, I have submitted a PR to revive this project - it does work, the POC is there in a form of docker-compose.yaml deployment 🙂 some obvious things are missing for now (like kafka output instead of api) but I think it's a good starting point and it's compatible with latest trino and OL

+*Thread Reply:* Thanks for put the foundation for the implementation. Base on it, I feel @Alok would still participate and make contribute to it. How about create a design doc and list all of the possible TBDs as @Julien Le Dem suggested.

+ + + +*Thread Reply:* Adding @takezoe to this thread. Thanks for your work on a Trino integration and welcome!

+ + + +*Thread Reply:* throwing the CFP for the Trino conference here in case any one of the contributors want to present there https://sessionize.com/trino-fest-2024

+*Thread Reply:* I'm also very happy to help with an idea for an abstract

+ + + +*Thread Reply:* Hey Harel +Just FYI we are already engaged with Trino community to have a talk around Trino open lineage integration and have submitted an Abstract for review.

+ + + +*Thread Reply:* once you release the integration, please add a reference about it to OpenLineage docs! +https://github.com/OpenLineage/docs

+*Thread Reply:* I think it's ready for review https://github.com/trinodb/trino/pull/21265 just with API sink integration, additional features can be added at @Alok's convenience as next PRs

+Hey, there’s discrepancy between

disabled option)*Thread Reply:* I believe we should not extract or emit any open lineage events if this option is used

+ + + +*Thread Reply:* I'm for option 2, don't send any event from task

+ + + +*Thread Reply:* @Jakub Dardziński do you see any use case for not extracting metadata extraction but still emitting events?

+ + + +*Thread Reply:* The use case AFAIK was old SnowflakeOperator bug, we wanted to disable the collection there, since it zombified the task. The events being emitted still gave information about status of the task as well as non-dataset related metadata

+ + + +*Thread Reply:* but I think it's less relevant now

+ + + +*Thread Reply:* ^ this and you might want to have information about task execution because OL is a backend for some task-tracking system

+ + + +*Thread Reply:* Hm, I believe users don't expect us to spend time processing/extracting OL events if this configuration is used. It's the documented behaviour

+ + + +*Thread Reply:* the question is if we should change docs or behaviour

+ + + +*Thread Reply:* I believe the latter

+ + + +*Thread Reply:* +1 behaviour

+ + + +Hi, here's the

*Thread Reply:* Looks like a great agenda! Left a couple of comments

+ + + +*Thread Reply:* @Michael Robinson will you be able to facilitate or do you need help?

+ + + +*Thread Reply:* I'm also missing from the committer list, but can't comment on slides 🙂

+ + + +*Thread Reply:* Sorry about that @Kacper Muda. Gave you access just now

+ + + +*Thread Reply:* We probably need to add you to lists posted elsewhere... I'll check

+ + + +*Thread Reply:* No worries, thanks 🙂 !

+ + + +https://github.com/open-metadata/OpenMetadata/pull/15317 👀

+ + + +*Thread Reply:* this is awesome

+ + + +*Thread Reply:* it looks like they use temporary deployments to test...

+ + + +*Thread Reply:* yeah the GitHub history is wild

+ + + +Hi, I'm at the conference hotel and my earbuds won't pair with my new mac for some reason. Does the agenda look good? Want to send out the reminders soon. I'll add the OpenMetadata news!

+ + + +*Thread Reply:* I think we can also add the Datahub PR?

+ + + +*Thread Reply:* @Paweł Leszczyński prefers to present only the circuit breakers

+ + + +*Thread Reply:* https://github.com/datahub-project/datahub/pull/9870/files

+ + + +*Thread Reply:* This one?

+ + + +*Thread Reply:* yes!

+ + + +It's been a while since we've updated the twitter profile. Current description: "A standard api for collecting Data lineage and Metadata at runtime." What would you think of using our website's tagline: "An open framework for data lineage collection and analysis." Other ideas?

+ + + +can someone grant me write access to our forked sqlparser-rs repo?

*Thread Reply:* @Julien Le Dem maybe?

+ + + +*Thread Reply:* I should probably add the committer group to it

+ + + +*Thread Reply:* I have made the committer group maintainer on this repo

+ + + +https://github.com/OpenLineage/OpenLineage/pull/2514 +small but mighty 😉

+Regarding the approved release, based on the additions it seems to me like we should make it a minor release (so 1.10.0). Any objections? Changes are here: https://github.com/OpenLineage/OpenLineage/compare/1.9.1...HEAD

+ + + +We encountered a case of a START event, exceeding 2MB in Airflow. This was traced back to an operator with unusually long arguments and attributes. Further investigation revealed that our Airflow events contain redundant data across different facets, leading to unnecessary bloating of event sizes (those long attributes and args were attached three times to a single event). I proposed to remove some redundant facets and to refine the operator's attributes inclusion logic within AirflowRunFacet. I am not sure how breaking is this change, but some systems might depend on the current setup. Suggesting an immediate removal might not be the best approach, and i'd like to know your thoughts. (A similar problem exists within the Airflow provider.) +CC @Maciej Obuchowski @Willy Lulciuc @Jakub Dardziński

+ +https://github.com/OpenLineage/OpenLineage/pull/2509

+As mentioned during yesterday's TSC, we can't get insight into DataHub's integration from the PR description in their repo. And it's a very big PR. Does anyone have any intel? PR is here: https://github.com/datahub-project/datahub/pull/9870

+Changelog PR for 1.10 is RFR: https://github.com/OpenLineage/OpenLineage/pull/2516

+@Julien Le Dem @Paweł Leszczyński Release is failing in the Java client job due to (I think) the version of spotless: +```Could not resolve com.diffplug.spotless:spotlessplugingradle:6.21.0. + Required by: + project : > com.diffplug.spotless:com.diffplug.spotless.gradle.plugin:6.21.0

+ +++ + + +No matching variant of com.diffplug.spotless:spotlessplugingradle:6.21.0 was found. The consumer was configured to find a library for use during runtime, compatible with Java 8, packaged as a jar, and its dependencies declared externally, as well as attribute 'org.gradle.plugin.api-version' with value '8.4'```

+

*Thread Reply:* @Michael Robinson https://github.com/OpenLineage/OpenLineage/pull/2517

+fix to broken main: +https://github.com/OpenLineage/OpenLineage/pull/2518

+*Thread Reply:* Thanks, just tried again

+ + + +*Thread Reply:* ? +it needs approve and merge 😛

+ + + +*Thread Reply:* Oh oops disregard

+ + + +*Thread Reply:* different PR

+ + + +*Thread Reply:* 👍

+ + + +There's an issue with the Flink job on CI:

+** What went wrong:

+Could not determine the dependencies of task ':shadowJar'.

+> Could not resolve all dependencies for configuration ':runtimeClasspath'.

+ > Could not find io.**********************:**********************_sql_java:1.10.1.

+ Searched in the following locations:

+ - <https://repo.maven.apache.org/maven2/io/**********************/**********************-sql-java/1.10.1/**********************-sql-java-1.10.1.pom>

+ - <https://packages.confluent.io/maven/io/**********************/**********************-sql-java/1.10.1/**********************-sql-java-1.10.1.pom>

+ - file:/home/circleci/.m2/repository/io/**********************/**********************-sql-java/1.10.1/**********************-sql-java-1.10.1.pom

+ Required by:

+ project : > project :shared

+ project : > project :flink115

+ project : > project :flink117

+ project : > project :flink118

*Thread Reply:* https://github.com/OpenLineage/OpenLineage/pull/2521

+*Thread Reply:* @Jakub Dardziński still awake? 🙂

+ + + +*Thread Reply:* it’s just approval bot

+ + + +*Thread Reply:* created issue on how to avoid those in the future https://github.com/OpenLineage/OpenLineage/issues/2522

+ + + +*Thread Reply:* https://app.circleci.com/jobs/github/OpenLineage/OpenLineage/188526 I lack emojis on this server to fully express my emotions

+ + + +*Thread Reply:* https://openlineage.slack.com/archives/C065PQ4TL8K/p1710454645059659 +you might have missed that

+*Thread Reply:* merge -> rebase -> problem gone

+ + + +*Thread Reply:* PR to update the changelog is RFR @Jakub Dardziński @Maciej Obuchowski: https://github.com/OpenLineage/OpenLineage/pull/2526

+https://github.com/OpenLineage/OpenLineage/pull/2520 +It’s a long-awaited PR - feel free to comment!

+https://github.com/OpenLineage/OpenLineage/blob/main/spec/facets/ParentRunFacet.json#L20

+here the format is uuid

+however if you follow logic for parent id in current dbt integration you might discover that parent run facet has assigned value of DAG’s run_id (which is not uuid)

@Julien Le Dem, what has higher priority? I think lots of people are using dbt-ol wrapper with current lineage_parent_id macro

*Thread Reply:* It is a uuid because it should be the id of an OL run

+ + + +where can I find who has write access to OL repo?

+ + + +*Thread Reply:* Settings > Collaborators and teams

+ + + +*Thread Reply:* thanks Michael, seems like I don’t have enough permissions to see that

+ + + +Sorry, I have a dr appointment today and won’t join the meeting

+ + + +*Thread Reply:* I gotta skip too. Maciej and Pawel are at the Kafka Summit

+ + + +*Thread Reply:* I hope you’re fine!

+ + + +*Thread Reply:* I am fine thank you 🙂

+ + + +*Thread Reply:* just a visit

+ + + +Should we cancel the sync today?

+ + + +looking at XTable today, any thoughts on how we can collaborate with them?

+.png) +

+

+

+

+

+

+

+ *Thread Reply:* @Julien Le Dem @Willy Lulciuc this reminds me of some ideas we had a few years ago.. :)

+ + + +*Thread Reply:* hmm.. ok. maybe not that relevant for us, at first I thought this was an abstraction for read/write on top of Iceberg/Hudi/Delta.. but I think this is more of a data sync appliance. would still be relevant for linking together synced datasets (but I don't think it's that important now)

+ + + +*Thread Reply:* From the introduction https://www.confluent.io/blog/introducing-tableflow/, looks like they are using Flink for both data ingestion and compaction. It means we should at least consider to support hudi source and sink for flink lineage 🙂

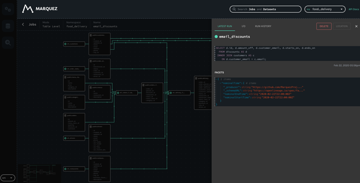

+ +

+

+

+

+

+

+

+ A key growth metric trending in the right direction:

+ + +

+

+

+

+

+

+

+ Eyes on this PR to add OpenMetadata to the Ecosystem page would be appreciated: https://github.com/OpenLineage/docs/pull/303. TIA! @Mariusz Górski

+I really want to improve this page in the docs, anyone wants to work with me on that?

+*Thread Reply:* perhaps also make this part of the PR process, so when we add support for something, we remember to update the docs

+ + + +*Thread Reply:* I free up next week and would love to chat… obviously, time permitting but the page needs some love ❤️

+ + + +*Thread Reply:* I can verify the information once you have some PR 🙂

+ + + +RFR: a PR to add DataHub to the Ecosystem page https://github.com/OpenLineage/docs/pull/304

+*Thread Reply:* The description comes from the very brief README in DataHub's GH repo and a glance at the code. No other documentation or resources appear to be available.

+ + + +*Thread Reply:* @Tamás Németh

+ + + +Dagster is launching column-lineage support for dbt using the sqlglot parser https://github.com/dagster-io/dagster/pull/20407

+*Thread Reply:* I kinda like their approach to use post-hooks in order to enable column-level lineage so that custom macro collects information about columns, logs it and they parse the log after the execution

*Thread Reply:* it doesn’t force dbt docs generate step that some might not want to use

*Thread Reply:* but at the same time reuses DBT adapter to make additional calls to retrieve missing metadata

+ + + +@Paweł Leszczyński interesting project I came across over the weekend: https://github.com/HamaWhiteGG/flink-sql-lineage

+*Thread Reply:* Wow, this is something we would love to have (flink SQL support). It's great to know that people around the globe are working on the same thing and heading same direction. Great finding @Willy Lulciuc. Thanks for sharing!

+ + + +*Thread Reply:* On Kafka Summit I've talked with Timo Walther from Flink SQL team and he proposed alternative approach.

+ +Flink SQL has stable (across releases) CompiledPlan JSON text representation that could be parsed, and has all the necessary info - as this is used for serializing actual execution plan both ways.

*Thread Reply:* As Flink SQL will convert to transformations before execution, technical speaking our existing solution has already be able to create linage info for Flink SQL apps (not including column lineage and table schemas (that can be inferred within flink table environment)). I will create Flink SQL job for e2e testing purpose.

+ + + +*Thread Reply:* I am also working on Flink side for table lineage. Hopefully, new lineage features can be released in flink 1.20.

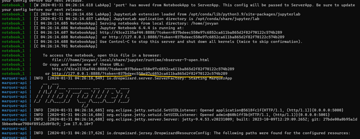

+ + + +Sessions for this year's Data+AI Summit have been published. A search didn't turn up anything related to lineage, but did you know Julien and Willy's talk at last year's summit has received 4k+ views? 👀

+ +

+

+

+

+

+

+

+ *Thread Reply:* seems like our talk was not accepted, but I can see 9 sessions on unity catalog 😕

+ + + +finally merged 🙂

+ + + +pawel-big-lebowski commented on Nov 21, 2023

+whoa

I’ll miss the sync today (on the way to data council)

+ + + +*Thread Reply:* have fun at the conference!

+ + + +OK @Maciej Obuchowski - 1 job has many stages; 1 stage has many tasks. Transitively, this means that 1 job has many tasks.

+ + + +*Thread Reply:* batch or streaming one? 🙂

+ + + +*Thread Reply:* Doesn't matter. It's the same concept.

+ + + +Also @Paweł Leszczyński, seem Spark metrics has this:

+ +local-1711474020860.driver.LiveListenerBus.listenerProcessingTime.io.openlineage.spark.agent.OpenLineageSparkListener

+ count = 12

+ mean rate = 1.19 calls/second

+ 1-minute rate = 1.03 calls/second

+ 5-minute rate = 1.01 calls/second

+ 15-minute rate = 1.00 calls/second

+ min = 0.00 milliseconds

+ max = 1985.48 milliseconds

+ mean = 226.81 milliseconds

+ stddev = 549.12 milliseconds

+ median = 4.93 milliseconds

+ 75% <= 53.64 milliseconds

+ 95% <= 1985.48 milliseconds

+ 98% <= 1985.48 milliseconds

+ 99% <= 1985.48 milliseconds

+ 99.9% <= 1985.48 milliseconds

Do you think Bipan's team could potentially benefit significantly from upgrading to the latest version of openlineage-spark? https://openlineage.slack.com/archives/C01CK9T7HKR/p1711483070147019

+*Thread Reply:* @Paweł Leszczyński wdyt?

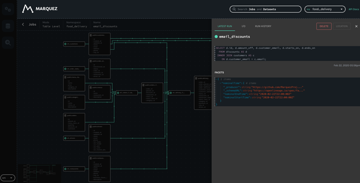

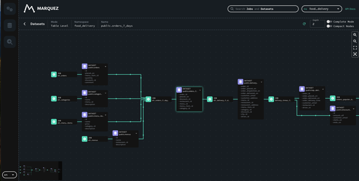

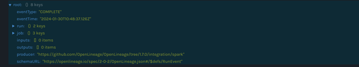

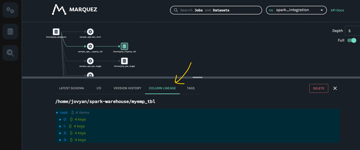

+ + + +*Thread Reply:* I think the issue here is that marquez is not able to properly visualize parent run events that Maciej has added recently for a Spark application

+ + + +*Thread Reply:* So if they downgraded would they have a graph closer to what they want?

+ + + +*Thread Reply:* I don't see parent run events there?

+ + + +I'm exploring ways to improve the demo gif in the Marquez README. An improved and up-to-date demo gif could also be used elsewhere -- in the Marquez landing pages, for example, and the OL docs. Along with other improvements to the landing pages, I created a new gif that's up to date and higher-resolution, but it's large (~20 MB). +• We could put it on YouTube and link to it, but that would downgrade the user experience in other ways. +• We could host it somewhere else, but that would mean adding another tool to the stack and, depending on file size limits, could cost money. (I can't imagine it would cost but I haven't really looked into this option yet. Regardless of cost, tt seems to have the same drawbacks as YT from a UX perspective.) +• We could have GitHub host it in another repo (for free) in the Marquez or OL orgs. + ◦ It could go in the OL Docs because it's likely we'll want to use it in the docs anyway, but even if we never serve it wouldn't this create issues for local development at a minimum? I opened a PR to do this, which a PR with other improvements is waiting on, but not sure about this approach. + ◦ It could go in the unused Marquez website repo, but there's a good chance we'll forget it's there and remove or archive the repo without moving it first. + ◦ In another repo, or even a new one for stuff like this? +Anyone have an opinion or know of a better option?

+ + + +*Thread Reply:* maybe make it a HTML5 video?

+ + + +*Thread Reply:* https://wp-rocket.me/blog/replacing-animated-gifs-with-html5-video-for-faster-page-speed/

+ +

+

+

+

+

+

+

+ *Thread Reply:* 👀

+ + + +@Julien Le Dem @Harel Shein how did Data Council panel and talk go?

+ + + +*Thread Reply:* Was just composing the message below :)

+ + + +Some great discussions here at data council, the panel was really great and we can definitely feel energy around OpenLineage continuing to build up! 🚀 +Thanks @Julien Le Dem for organizing and shoutout to @Ernie Ostic @Sheeri Cabral (Collibra) @Eric Veleker for taking the time and coming down here and keeping pushing more and building the community! ❤️

+ + + +*Thread Reply:* @Harel Shein did anyone take pictures?

+ + + +*Thread Reply:* there should be plenty of pictures from the conference organizers, we'll ask for some

+ + + +*Thread Reply:* Did a search and didn't see anything

+ + + +*Thread Reply:* Speaker dinner the night before: https://www.linkedin.com/posts/datacouncil-aidatacouncil-ugcPost-7178852429705224193-De46?utmsource=share&utmmedium=memberios|https://www.linkedin.com/posts/datacouncil-aidatacouncil-ugcPost-7178852429705224193-De46?utmsource=share&utmmedium=memberios

+ +

+

+

+

+

+

+

+ *Thread Reply:* Ahah. Same picture

+ + + +*Thread Reply:* haha. Julien and Ernie look great while I'm explaining how to land an airplane 🛬

+ + + +*Thread Reply:* Great pic!

+ + + +*Thread Reply:* awesome! just in time for the newsletter 🙂

+ + + +*Thread Reply:* Thank you for thinking of us. Onwards and upwards.

+ + + +I just find the naming conventions for hive/iceberg/hudi are not listed in the doc https://openlineage.io/docs/spec/naming/. Shall we further standardize them? Any suggestions?

+*Thread Reply:* Yes. This also came up in a conversation with one of the maintainers of dbt-core, we can also pick up on a proposal to extend the naming conventions markdown to something a bit more scalable.

+ + + +*Thread Reply:* What you think about this proposal? +https://github.com/OpenLineage/OpenLineage/pull/1702

+*Thread Reply:* Thanks for sharing the info. Will take a deeper look later today.

+ + + +*Thread Reply:* I think this is similar topic to resource naming in ODD, might be worth to take a look for inspiration: https://github.com/opendatadiscovery/oddrn-generator

+*Thread Reply:* the thing is we need to have language-agnostic way of defining those naming conventions and be able to generate code for them, similar to facets spec

+ + + +*Thread Reply:* could be also an idea to have micro rest api embedded in each client, so managing naming convention would be stored there and each client (python/java) could run it as a subprocess 🤔

+ + + +*Thread Reply:* we can also just write it in Rust, @Maciej Obuchowski 😁

+ + + +*Thread Reply:* no real changes/additions, but starting to organize the doc for now: https://github.com/OpenLineage/OpenLineage/pull/2554

+ + + +@Maciej Obuchowski we also heard some good things about the sqlglot parser. have you looked at it recently?

+*Thread Reply:* I love the fact that our parser is in type safe language :)

+ + + +*Thread Reply:* does it matter after all when it comes to parsing SQL? +it might be worth to run some comparisons but it may turn out that sqlglot misses most of Snowflake dialect that we currently support

+ + + +*Thread Reply:* We'd miss on Java side parsing as well

+ + + +*Thread Reply:* very importantly this ^

+ + + +*Thread Reply:* That’s important. Yes

+ + + +OpenLineage 1.11.0 release vote is now open: https://openlineage.slack.com/archives/C01CK9T7HKR/p1711980285409389

+Sorry, I’ll be late to the sync

+ + + +forgot to mention, but we have the TSC meeting coming up next week. we should start sourcing topics

+ + + +*Thread Reply:* 1.10 and 1.11 releases +Data Council, Kafka Summit, & Boston meetup shout outs and quick recaps +Datadog poc update or demo?

+ + + +*Thread Reply:* Discussion item about Trino integration next steps?

+ + + +*Thread Reply:* Accenture+Confluent roundtable reminder for sure

+ + + +*Thread Reply:* job to job dependencies discussion item? https://openlineage.slack.com/archives/C065PQ4TL8K/p1712153842519719

+*Thread Reply:* I think it's too early for Datadog update tbh, but I like the job to job discussion. +We can make also bring up the naming library discussion that we talked about yesterday

+ + + +one more thing, if we want we could also apply for a free Datadog account for OpenLineage and Marquez: https://www.datadoghq.com/partner/open-source/

+ +

+

+

+

+

+

+

+ *Thread Reply:* would be nice for tests

+ + + +is there any notion of process dependencies in openlineage? i.e. if I have two airflow tasks that depend on each other, with no dataset in between, can I express that in the openlineage spec?

+ + + +*Thread Reply:* AFAIK no, it doesn't aim to do reflect that +cc @Julien Le Dem

+ + + +*Thread Reply:* It is not in the core spec but this could be represented as a job facet. It is probably in the airflow facet right now but we could add a more generic job dependency facet

+ + + +*Thread Reply:* we do represent hierarchy though - with ParentRunFacet

*Thread Reply:* if we were to add some dependency facet, what would we want to model?

+ +*Thread Reply:* do we also want to model something like Airflow's trigger rules? https://airflow.apache.org/docs/apache-airflow/stable/core-concepts/dags.html#trigger-rules

+ + + +*Thread Reply:* I don't think this is about hierarchy though, right? If I understand @Julian LaNeve correctly, I think it's more #2

+ + + +*Thread Reply:* yeah it's less about hierarchy - definitely more about #2.

+ +assume we have a DAG that looks like this:

+Task A -> Task B -> Task C

+today, OL can capture the full set of dependencies this if we do:

+A -> (dataset 1) -> B -> (ds 2) -> C

+but it's not always the case that you have datasets between everything. my question was moreso around "how can I use OL to capture the relationship between jobs if there are no datasets in between"

*Thread Reply:* I had opened an issue to track this a while ago but we did not get too far in the discussion: https://github.com/OpenLineage/OpenLineage/issues/552

+*Thread Reply:* oh nice - unsurprisingly you were 2 years ahead of me 😆

+ + + +*Thread Reply:* You can track the dependency both at the job level and at the run level.

+At the job level you would do something along the lines of:

+job: { facets: {

+ job_dependencies: {

+ predecessors: [

+ { namespace: , name: }, ...

+ ],

+ successors: [

+ { namespace: , name: }, ...

+ ]

+ }

+}}

*Thread Reply:* At the run level you could track the actual task run dependencies:

+run: { facets: {

+ run_dependencies: {

+ predecessor: [ "{run uuid}", ...],

+ successors: [...],

+ }

+}}

*Thread Reply:* I think the current airflow run facet contains that information in an airflow specific representation: https://github.com/apache/airflow/blob/main/airflow/providers/openlineage/plugins/facets.py

+*Thread Reply:* I think we should have the discussion in the ticket so that it does not get lost in the slack history

+ + + +*Thread Reply:* run: { facets: {

+ run_dependencies: {

+ predecessor: [ "{run uuid}", ...],

+ successors: [...],

+ }

+}}

+I like this format, but would have full run/job identifier as ParentRunFacet

*Thread Reply:* For the trigger rules I wonder if this is too specific to airflow.

+ + + +*Thread Reply:* But if there’s a generic way to capture this, it makes sense

+ + + +Don't forget to register for this! https://events.confluent.io/roundtable-data-lineage/Accenture

+ +

+

+

+

+

+

+

+ This attempt at a SQLAlchemy was basically working, if not perfectly, the last time I played with it: https://github.com/OpenLineage/OpenLineage/pull/2088. What more do I need to do to get it to the point where it can be merged as an "experimental"/"we warned you" integration? I mean, other than make sure it's still working and clean it up? 🙂

+ + + +https://docs.getdbt.com/docs/collaborate/column-level-lineage#sql-parsing

+*Thread Reply:* seems like it’s only for dbt cloud

+ + + +*Thread Reply:* > Column-level lineage relies on SQL parsing. +Was thinking about doing the same thing at some point

+ + + +*Thread Reply:* Basically with dbt we know schemas, so we also can resolve wildcards as well

+ + + +*Thread Reply:* but that requires adding capability for providing known schema into sqlparser

+ + + +*Thread Reply:* that's not very hard to add afaik 🙂

+ + + +*Thread Reply:* not exactly into sqlparser too

+ + + +*Thread Reply:* just our parser

+ + + +*Thread Reply:* yeah, our parser

+ + + +*Thread Reply:* still someone has to add it :D

+ + + +*Thread Reply:* some rust enthusiast probably

+ + + +*Thread Reply:* 👀

+ + + +*Thread Reply:* but also: dbt provides schema info only if you generate catalog.json with generate docs command

+ + + +*Thread Reply:* Right now we have the dbl-ol wrapper anyway, so we can make another dbt docs command on behalf of the user too

+ + + +*Thread Reply:* not sure if running commands on behalf of user is good idea, but denoting in docs that running it increases accuracy of column-level lineage is probably a good idea

+ + + +*Thread Reply:* once we build it

+ + + +*Thread Reply:* of course

+ + + +*Thread Reply:* That depends, what are the side effects of running dbt docs?

+ + + +*Thread Reply:* the other option is similar to dagster's approach - run post-hook macro that prints schema to logs and read the logs with dbt-ol wrapper

+ + + +*Thread Reply:* which again won't work in dbt cloud - there catalog.json seems like the only option

+ + + +*Thread Reply:* > That depends, what are the side effects of running dbt docs? +refreshing someone's documentation? 🙂

+ + + +*Thread Reply:* it would be configurable imho, if someone doesn’t want column level lineage in price of additional step, it’s their choice

+ + + +*Thread Reply:* yup, agreed. I'm sure we can also run dbt docs to a temp directory that we'll delete right after

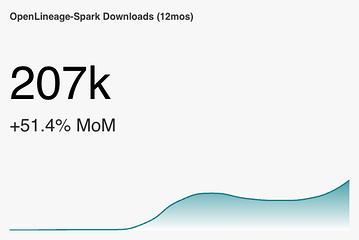

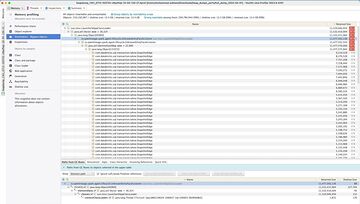

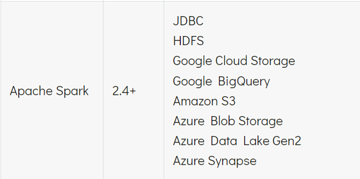

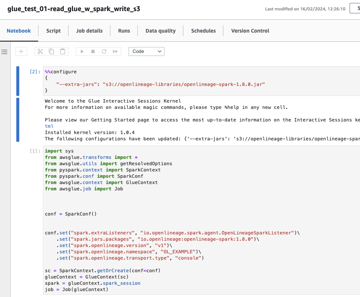

+ + + +Some encouraging stats from Sonatype: these are Spark integration downloads (unique IPs) over the last 12 months

+ +*Thread Reply:* That's an increase of 17560.5%

+ + + +*Thread Reply:* https://github.com/OpenLineage/OpenLineage/releases/tag/1.11.3 +that’s a lot of notes 😮

+ + + +Marquez committers: there's a committer vote open 👀

+ + + +did anyone submit a CFP here? https://sessionize.com/open-source-summit-europe-2024/ +it's a linux foundation conference too

+ +

+

+

+

+

+

+

+ *Thread Reply:* looks like a nice conference

+ + + +*Thread Reply:* too far for me, but might be a train ride for you?

+ + + +*Thread Reply:* yeah, I might submit something 🙂

+ + + +*Thread Reply:* and I think there are actually direct trains to Vienna from Warsaw

+ + + +Hmm @Maciej Obuchowski @Paweł Leszczyński - I see we released 1.11.3, but I don't see the artifacts in central. Are the artifacts blocked?

+ + + +*Thread Reply:* after last release, it took me some 24h to see openlineage-flink artifact published

+ + + +*Thread Reply:* I recall something about the artifacts had to be manually published from the staging area.

+ + + +*Thread Reply:* @Maciej Obuchowski - can you check if the release is stuck in staging?

+ + + +*Thread Reply:* I recall last time it failed because there wasn't a javadoc associated with it

+ + + +*Thread Reply:* Nevermind @Paweł Leszczyński @Maciej Obuchowski - it seems like the search indexes haven't been updated.

+ + + +*Thread Reply:* https://repo.maven.apache.org/maven2/io/openlineage/openlineage-spark_2.13/1.11.3/

+ + + +*Thread Reply:* @Michael Robinson has to manually promote them but it's not instantaneous I believe

+ + + +I'm seeing some really strange behavior with OL Spark, I'm going to give some data to help out, but these are still breadcrumbs unfortunately. 🧵

+ + + +*Thread Reply:* the driver for this job is running for more than 5 hours, but the job actually finished after 20 minutes

+ + + +*Thread Reply:* most the cpu time in those 5 hours are spent in openlineage methods

+ + + +*Thread Reply:* it's also not reproducible 😕

+ + + +*Thread Reply:* but happens "sometimes"

+ + + +*Thread Reply:* DatasetIdentifier.equals?

*Thread Reply:* can you check what calls it?

+ + + +*Thread Reply:* unfortunately, some of the stack frames are truncated by JVM

+ + + +*Thread Reply:* maybe this has something to do with SymLink and the lombok implementation of .equals() ?

+ + + +*Thread Reply:* and then some sort of circular dependency

+ + + +*Thread Reply:* one possible place, looks like n^2 algorithm: https://github.com/OpenLineage/OpenLineage/blob/4ba93747e862e333267b46a57f02a09264[…]rk3/agent/lifecycle/plan/column/JdbcColumnLineageCollector.java

+*Thread Reply:* but is this a JDBC job?

+ + + +*Thread Reply:* let me see

+ + + +*Thread Reply:* I don't think so

+ + + +*Thread Reply:* it's not

+ + + +*Thread Reply:* ok, we don't use lang3 Pair a lot - it has to be in ColumnLevelLineageBuilder 🙂

+ + + +*Thread Reply:* yes.. I'm staring at that class for a while now

+ + + +*Thread Reply:* what's the rough size of the logical plan of the job?

+ + + +*Thread Reply:* I'm trying to understand whether we're looking at some infinite loop

+ + + +*Thread Reply:* or just something done very ineffiently

+ + + +*Thread Reply:* like every input being added in this manner: +``` public void addInput(ExprId exprId, DatasetIdentifier datasetIdentifier, String attributeName) { + inputs.computeIfAbsent(exprId, k -> new LinkedList<>());

+ +Pair<DatasetIdentifier, String> input = Pair.of(datasetIdentifier, attributeName);

+

+if (!inputs.get(exprId).contains(input)) {

+ inputs.get(exprId).add(input);

+}

+}``

+it's a candidate: it has to traverse the list returned frominputs` for every CLL dependency field added

*Thread Reply:* it looks like we're building size N list in N^2 time:

+inputs.stream()

+ .filter(i -> i instanceof InputDatasetFieldWithIdentifier)

+ .map(i -> (InputDatasetFieldWithIdentifier) i)

+ .forEach(

+ i ->

+ context

+ .getBuilder()

+ .addInput(

+ ExprId.apply(i.exprId().exprId()),

+ new DatasetIdentifier(

+ i.datasetIdentifier().getNamespace(), i.datasetIdentifier().getName()),

+ i.field()));

+🙂

*Thread Reply:* ah, this isn't even used now since it's for new extension-based spark collection

+ + + +*Thread Reply:* @Paweł Leszczyński this is most likely a future bug ⬆️

+ + + +*Thread Reply:* I think we're still doing it now anyway:

+``` private static void extractInternalInputs(

+ LogicalPlan node,

+ ColumnLevelLineageBuilder builder,

+ List

datasetIdentifiers.stream()

+ .forEach(

+ di -> {

+ ScalaConversionUtils.fromSeq(node.output()).stream()

+ .filter(attr -> attr instanceof AttributeReference)

+ .map(attr -> (AttributeReference) attr)

+ .collect(Collectors.toList())

+ .forEach(attr -> builder.addInput(attr.exprId(), di, attr.name()));

+ });

+}```

+ + + +*Thread Reply:* and that's linked list - must be pretty slow jumping all those pointers

+ + + +*Thread Reply:* maybe it's that simple 🙂 +https://github.com/OpenLineage/OpenLineage/commit/306778769ae10fa190f3fd0eff7a6482fc50f57f

+ + + +*Thread Reply:* There are some more funny places in CLL code, like we're iterating over list of schema fields and calling some function with name of that field :

+schema.getFields().stream()

+ .map(field -> Pair.of(field, getInputsUsedFor(field.getName())))

+then immediately iterate over it second time to get the field back from it's name:

+List<Pair<DatasetIdentifier, String>> getInputsUsedFor(String outputName) {

+ Optional<OpenLineage.SchemaDatasetFacetFields> outputField =

+ schema.getFields().stream()

+ .filter(field -> field.getName().equalsIgnoreCase(outputName))

+ .findAny();

*Thread Reply:* I think the time spent by the driver (5 hours) just on these methods smells like an infinite loop?

+ + + +*Thread Reply:* like, as inefficient as it may be, this is a lot of time

+ + + +*Thread Reply:* did it finish eventually?

+ + + +*Thread Reply:* yes... but.. I wonder if something killed it somewhere?

+ + + +*Thread Reply:* I mean, it can be something like 10000^3 loop 🙂

+ + + +*Thread Reply:* I couldn't find anything in the logs to indicate

+ + + +*Thread Reply:* and it has to do those pair comparisons

+ + + +*Thread Reply:* would be easier if we could see the general size of a plan of this job - if it's something really small then I'm probably wrong

+ + + +*Thread Reply:* but if there are 1000s of columns... anything can happen 🙂

+ + + +*Thread Reply:* yeah.. trying to find out. I don't have that facet enabled there, and I can't find the ol events in the logs (it's writing to console, and I think they got dropped)

+ + + +*Thread Reply:* DevNullTransport 🙂

+ + + +*Thread Reply:* I think this might be potentially really slow too https://github.com/OpenLineage/OpenLineage/blob/50afacdf731f810354be0880c5f1fd05a1[…]park/agent/lifecycle/plan/column/ColumnLevelLineageBuilder.java

+*Thread Reply:* generally speaking, we have a similar problem here like we had with Airflow integration

+ + + +*Thread Reply:* we are not holding up the job per se, but... we are holding up the spark application

+ + + +*Thread Reply:* do we have a way to be defensive about that somehow, shutdown hook from spark to our thread or something

+ + + +*Thread Reply:* there's no magic

+ + + +*Thread Reply:* circuit breaker with timeout does not work?

+ + + +*Thread Reply:* it would, but we don't turn that on by default

+ + + +*Thread Reply:* also, if we do, what should be our default values?

+ + + +*Thread Reply:* what would not hurt you if you enabled it, 30 seconds?

+ + + +*Thread Reply:* I guess we should aim much lower with the runtime

+ + + +*Thread Reply:* yeah, and make sure we emit metrics / logs when that happens

+ + + +*Thread Reply:* wait, our circuit breaker right now only supports cpu & memory

+ + + +*Thread Reply:* we would need to add a timeout one, right?

+ + + +*Thread Reply:* ah, yes

+ + + +*Thread Reply:* we've talked about it but it's not implemented yet https://github.com/OpenLineage/OpenLineage/blob/3dad978a3a76ea9bb709334f1526086f95[…]o/openlineage/client/circuitBreaker/ExecutorCircuitBreaker.java

+*Thread Reply:* and BTW, no abnormal CPU or memory usage?

+ + + +*Thread Reply:* nope, not at all

+ + + +*Thread Reply:* I mean, it's using 100% of one core 🙂

+ + + +*Thread Reply:* it's similar to what aniruth experienced. there's something that for some type of logical plans causes recursion alike behaviour. However, I don't think it's recursion bcz it's ending at some point. If we had DebugFacet we would be able to know which logical plan nodes are involved in this.

+ + + +*Thread Reply:* I'll try to get that for us

+ + + +*Thread Reply:* > If we had DebugFacet we would be able to know which logical plan nodes are involved in this. +if the event would not take 1GB 🙂

+ + + +*Thread Reply:* > it's similar to what aniruth experienced. there's something that for some type of logical plans causes recursion alike behaviour. However, I don't think it's recursion bcz it's ending at some point. If we had DebugFacet we would be able to know which logical plan nodes are involved in this. (edited) +what about my thesis that something is just extremely slow in column-level lineage code?

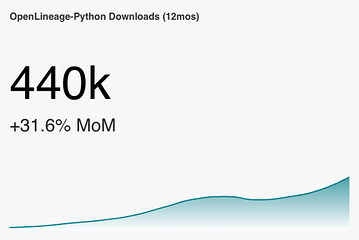

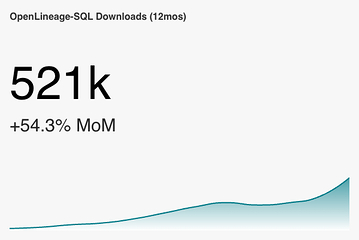

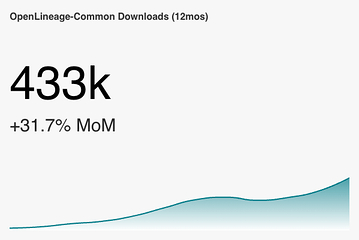

+ + + +Some adoption metrics from Sonatype and PyPI, visualized using Preset. In Preset, you can see the number for each month (but we're out of seats on the free tier there). The big number is the downloads for the last month (February in most cases).

+ + +

+

+

+

+

+

+

+  +

+

+

+

+

+

+

+  +

+

+

+

+

+

+

+  +

+

+

+

+

+

+

+ Good news. @Paweł Leszczyński - the memory leak fixes worked. Our streaming pipelines have run through the weekend without a single OOM crash.

+ + + +*Thread Reply:* @Damien Hawes Would you please point me the PR that fixes the issue?

+ + + +*Thread Reply:* This was the issue: https://github.com/OpenLineage/OpenLineage/issues/2561

+ +There were two PRs:

+ +*Thread Reply:* @Peter Huang ^

+ + + +*Thread Reply:* @Damien Hawes any other feedback for OL with streaming pipelines you have so far?

+ + + +*Thread Reply:* It generates a TON of data

+ + + +*Thread Reply:* There are some optimisations that could be made:

+ +job start -> stage submitted -> task started -> task ended -> stage complete -> job end cycle fires more frequently.*Thread Reply:* This has an impact on any backend using it, as the run id keeps changing. This means the parent suddenly has thousands of jobs as children.

+ + + +*Thread Reply:* Our biggest pipeline generates a new event cycle every 2 minutes.

+ + + +*Thread Reply:* "Too much data" is exactly what I thought 🙂 +The obvious potential issue with caching is the same issue we just fixed... potential memory leaks, and cache invalidation

+ + + +*Thread Reply:* > the run id keeps changing

+In this case, that's a bug. We'd still need some wrapping event for whole streaming job though, probably other than application start

*Thread Reply:* on the other topic, did those problems stop? https://github.com/OpenLineage/OpenLineage/issues/2513 +with https://github.com/OpenLineage/OpenLineage/pull/2535/files

+when talking about the naming scheme for datasets, would everyone here agree that we generally use: {scheme}://{authority}/{unique_name} ? where generally authority == namespace

*Thread Reply:* I think so, and if we don’t then we should

+ + + +*Thread Reply:* ~which brings me to the question why construct dataset name as such~ nvm

+ + + +*Thread Reply:* please feel free to chime in here too https://github.com/dbt-labs/dbt-core/issues/8725

+ + + +*Thread Reply:* > where generally authority == namespace (edited)

+{scheme}://{authority} is namespace

*Thread Reply:* agreed

+ + + +Is it the case that Open Lineage defines the general framework but doesn’t actually enforce push or pull-based implementations, it just so happens that the reference implementation (Marquez) uses push?

@@ -8043,7 +8047,7 @@*Thread Reply:*

*Thread Reply:*

*Thread Reply:*

@@ -9685,7 +9693,7 @@*Thread Reply:*

Build on main passed (edited)

@@ -12784,6 +12796,43 @@I added this configuration to my cluster :

@@ -12891,11 +12944,15 @@I receive this error message:

@@ -13097,11 +13154,15 @@*Thread Reply:*

@@ -13251,11 +13312,15 @@Now I have this:

@@ -13416,11 +13481,15 @@*Thread Reply:* Hi , @Luke Smith, thank you for your help, are you familiar with this error in azure databricks when you use OL?

@@ -13451,11 +13520,15 @@*Thread Reply:*

@@ -13508,11 +13581,15 @@*Thread Reply:* Successfully got a basic prefect flow working

@@ -22372,29 +22453,41 @@Hey there, I’m not sure why I’m getting below error, after I ran OPENLINEAGE_URL=<http://localhost:5000> dbt-ol run , although running this command dbt debug doesn’t show any error. Pls help.

*Thread Reply:* Actually i had to use venv that fixed above issue. However, i ran into another problem which is no jobs / datasets found in marquez:

*Thread Reply:*

@@ -24252,20 +24361,28 @@*Thread Reply:* oh got it, since its in default, i need to click on it and choose my dbt profile’s account name. thnx

@@ -24357,11 +24478,15 @@*Thread Reply:* May I know, why these highlighted ones dont have schema? FYI, I used sources in dbt.

@@ -24418,11 +24543,15 @@*Thread Reply:* I prepared this yaml file, not sure this is what u asked

@@ -27866,11 +27995,15 @@I have a dag that contains 2 tasks:

@@ -28832,11 +28965,15 @@It created 3 namespaces. One was the one that I point in the spark config property. The other 2 are the bucket that we are writing to (

I can see if i enter in one of the weird jobs generated this:

@@ -28963,11 +29108,15 @@*Thread Reply:* This job with no output is a symptom of the output not being understood. you should be able to see the facets for that job. There will be a spark_unknown facet with more information about the problem. If you put that into an issue with some more details about this job we should be able to help.

If I check the logs of marquez-web and marquez I can't see any error there

When I try to open the job fulfilments.execute_insert_into_hadoop_fs_relation_command I see this window:

*Thread Reply:* Here's what I mean:

@@ -31226,7 +31391,7 @@*Thread Reply:* This is an example Lineage event JSON I am sending.

*Thread Reply:* There are two types of failures: tests failed on stage model (relationships) and physical error in master model (no table with such name). The stage test node in Marquez does not show any indication of failures and dataset node indicates failure but without number of failed records or table name for persistent test storage. The failed master model shows in red but no details of failure. Master model tests were skipped because of model failure but UI reports "Complete".

@@ -35638,20 +35839,28 @@dbt test failures, to visualize better that error is happening, for example like that:

@@ -35823,11 +36032,15 @@ hello everyone , i'm learning Openlineage, I am trying to connect with airflow 2, is it possible? or that version is not yet released. this is currently throwing me airflow

@@ -36077,6 +36290,43 @@ +

+

+

+

+

+

+

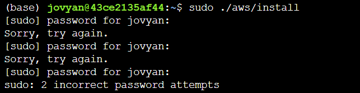

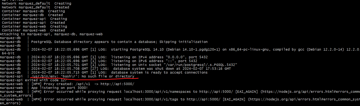

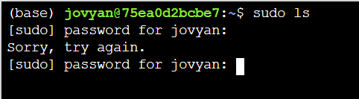

+ *Thread Reply:* It needs to show Docker Desktop is running :

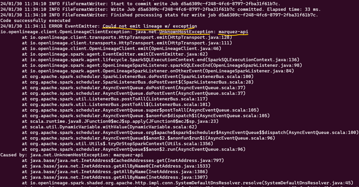

I've attached the logs and a screenshot of what I'm seeing the Spark UI. If you had a chance to take a look, it's a bit verbose but I'd appreciate a second pair of eyes on my analysis. Hopefully I got something wrong 😅

@@ -39983,11 +40253,15 @@*Thread Reply:* This is the one I wrote:

*Thread Reply:* however I can not fetch initial data when login into the endpoint

@@ -41681,11 +41959,15 @@@Kevin Mellott Hello Kevin, sorry to bother you again. I was finally able to configure Marquez in AWS using an ALB. Now I am receiving this error when calling the API

@@ -44042,11 +44328,15 @@Am i supposed to see this when I open marquez fro the first time on an empty database?

@@ -44433,11 +44723,15 @@Hi Everyone, Can someone please help me to debug this error ? Thank you very much all

@@ -49555,11 +49861,15 @@Hello everyone, I'm learning Openlineage, I finally achieved the connection between Airflow 2+ and Openlineage+Marquez. The issue is that I don't see nothing on Marquez. Do I need to modify current airflow operators?

@@ -49642,11 +49952,15 @@*Thread Reply:* Thanks, finally was my error .. I created a dummy dag to see if maybe it's an issue over the dag and now I can see something over Marquez

@@ -49824,7 +50142,7 @@happy to share the slides with you if you want 👍 here’s a PDF:

@@ -51028,11 +51350,15 @@Your periodical reminder that Github stars are one of those trivial things that make a significant difference for an OS project like ours. Have you starred us yet?

@@ -53756,11 +54082,15 @@*Thread Reply:*

@@ -53959,11 +54293,15 @@This is a similar setup as Michael had in the video.

@@ -54438,11 +54776,15 @@Hi~all, I have a question about lineage. I am now running airflow 2.3.1 and have started a latest marquez service by docker-compose. I found that using the example DAG of airflow can only see the job information, but not the lineage of the job. How can I configure it to see the lineage ?

@@ -57725,20 +58091,28 @@Hello all, after sending dbt openlineage events to Marquez, I am now looking to use the Marquez API to extract the lineage information. I am able to use python requests to call the Marquez API to get other information such as namespaces, datasets, etc., but I am a little bit confused about what I need to enter to get the lineage. I included screenshots for what the API reference shows regarding retrieving the lineage where it shows that a nodeId is required. However, this is where I seem to be having problems. It is not exactly clear where the nodeId needs to be set or what the nodeId needs to include. I would really appreciate any insights. Thank you!

@@ -57797,11 +58171,15 @@*Thread Reply:* You can do this in a few ways (that I can think of). First, by looking for a namespace, then querying for the datasets in that namespace:

@@ -57832,11 +58210,15 @@*Thread Reply:* Or you can search, if you know the name of the dataset:

@@ -60640,6 +61022,43 @@check this out folks - marklogic datahub flow lineage into OL/marquez with jobs and runs and more. i would guess this is a pretty narrow use case but it went together really smoothly and thought i'd share sometimes it's just cool to see what people are working on

@@ -64118,11 +64578,15 @@Hi all, I have been playing around with Marquez for a hackday. I have been able to get some lineage information loaded in (using the local docker version for now). I have been trying set the location (for the link) and description information for a job (the text saying "Nothing to show here") but I haven't been able to figure out how to do this using the /lineage api. Any help would be appreciated.

Putting together some internal training for OpenLineage and highlighting some of the areas that have been useful to me on my journey with OpenLineage. Many thanks to @Michael Collado, @Maciej Obuchowski, and @Paweł Leszczyński for the continued technical support and guidance.

@@ -65257,20 +65725,28 @@hi all, really appreciate if anyone could help. I have been trying to create a poc project with openlineage with dbt. attached will be the pip list of the openlineage packages that i have. However, when i run "dbt-ol"command, it prompted as öpen as file, instead of running as a command. the regular dbt run can be executed without issue. i would want i had done wrong or if any configuration that i have missed. Thanks a lot

@@ -65649,7 +66125,7 @@./gradlew :shared:spotlessApply && ./gradlew :app:spotlessApply && ./gradlew clean build test

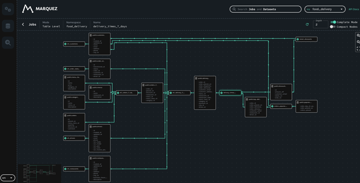

maybe another question for @Paweł Leszczyński: I was watching the Airflow summit talk that you and @Maciej Obuchowski did ( very nice! ). How is this exposed? I'm wondering if it shows up as an edge on the graph in Marquez? ( I guess it may be tracked as a parent run and if so probably does not show on the graph directly at this time? )

@@ -66869,11 +67349,15 @@*Thread Reply:*

@@ -68877,11 +69361,15 @@*Thread Reply:* After I send COMPLETE event with the same information I can see the dataset.

In this example I've added my-test-input on START and my-test-input2 on COMPLETE :

Here is the Marquez UI

@@ -72430,11 +72926,15 @@*Thread Reply:*

@@ -77177,11 +77677,15 @@*Thread Reply:* Apparently the value is hard coded in the code somewhere that I couldn't figure out but at-least learnt that in my Mac where this port 5000 is being held up can be freed by following the below simple step.

@@ -84818,11 +85322,15 @@But if I am not in a virtual environment, it installs the packages in my PYTHONPATH. You might try this to see if the dbt-ol script can be found in one of the directories in sys.path.

*Thread Reply:* this can help you verify that your PYTHONPATH and PATH are correct - installing an unrelated python command-line tool and seeing if you can execute it:

*Thread Reply:*

@@ -93252,11 +93768,15 @@Hi Team, I’m seeing creating data source, dataset API’s marked as deprecated . Can anyone point me how to create datasets via API calls?

@@ -94211,11 +94731,15 @@Is it possible to add column level lineage via api? Let's say I have fields A,B,C from my-input, and A,B from my-output, and B,C from my-output-s3. I want to see, filter, or query by the column name.

@@ -97313,11 +97837,15 @@23/04/20 10:00:15 INFO ConsoleTransport: {"eventType":"START","eventTime":"2023-04-20T10:00:15.085Z","run":{"runId":"ef4f46d1-d13a-420a-87c3-19fbf6ffa231","facets":{"spark.logicalPlan":{"producer":"https://github.com/OpenLineage/OpenLineage/tree/0.22.0/integration/spark","schemaURL":"https://openlineage.io/spec/1-0-5/OpenLineage.json#/$defs/RunFacet","plan":[{"class":"org.apache.spark.sql.catalyst.plans.logical.CreateTableAsSelect","num-children":2,"name":0,"partitioning":[],"query":1,"tableSpec":null,"writeOptions":null,"ignoreIfExists":false},{"class":"org.apache.spark.sql.catalyst.analysis.ResolvedTableName","num-children":0,"catalog":null,"ident":null},{"class":"org.apache.spark.sql.catalyst.plans.logical.Project","num-children":1,"projectList":[[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num_children":0,"name":"workorderid","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-cl

@@ -99066,11 +99594,15 @@Hi, I'm new to Open data lineage and I'm trying to connect snowflake database with marquez using airflow and getting the error in etl_openlineage while running the airflow dag on local ubuntu environment and unable to see the marquez UI once it etl_openlineage has ran completed as success.

*Thread Reply:* What's the extract_openlineage.py file? Looks like your code?

*Thread Reply:* This is my log in airflow, can you please prvide more info over it.

@@ -99735,20 +100275,28 @@*Thread Reply:*

@@ -101255,11 +101811,15 @@I have configured Open lineage with databricks and it is sending events to Marquez as expected. I have a notebook which joins 3 tables and write the result data frame to an azure adls location. Each time I run the notebook manually, it creates two start events and two complete events for one run as shown in the screenshot. Is this something expected or I am missing something?

@@ -102859,11 +103423,15 @@I have a usecase where we are connecting to Azure sql database from databricks to extract, transform and load data to delta tables. I could see the lineage is getting build, but there is no column level lineage through its 1:1 mapping from source. Could you please check and update on this.

@@ -102977,7 +103545,7 @@*Thread Reply:* Here is the code we use.

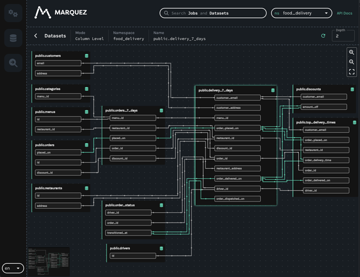

@Paweł Leszczyński @Michael Robinson

I can see my job there but when i click on the job when its supposed to show lineage, its just an empty screen

@@ -108535,11 +109107,15 @@*Thread Reply:* ohh but if i try using the console output, it throws ClientProtocolError

@@ -108596,11 +109172,15 @@*Thread Reply:* this is the dev console in browser

@@ -108831,11 +109411,15 @@*Thread Reply:* marquez didnt get updated

@@ -109339,6 +109923,43 @@ +

+

+

+

+

+

+

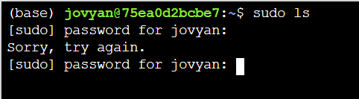

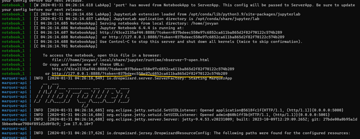

+ *Thread Reply:* @Michael Robinson When we follow the documentation without changing anything and run sudo ./docker/up.sh we are seeing following errors:

@@ -110112,11 +110741,15 @@*Thread Reply:* So, I edited up.sh file and modified docker compose command by removing --log-level flag and ran sudo ./docker/up.sh and found following errors:

@@ -110147,11 +110780,15 @@*Thread Reply:* Then I copied .env.example to .env since compose needs .env file

@@ -110182,11 +110819,15 @@*Thread Reply:* I got this error:

@@ -110273,11 +110914,15 @@*Thread Reply:* @Michael Robinson Then it kind of worked but seeing following errors:

@@ -110308,11 +110953,15 @@*Thread Reply:*

@@ -110656,11 +111305,15 @@*Thread Reply:*

@@ -111536,7 +112189,7 @@*Thread Reply:* This is the event generated for above query.

this is event for view for which no lineage is being generated

Hi, I am running a job in Marquez with 180 rows of metadata but it is running for more than an hour. Is there a way to check the log on Marquez? Below is the screenshot of the job:

@@ -116278,11 +116943,15 @@*Thread Reply:* Also, yes, we have an even viewer that allows you to query the raw OL events

@@ -116339,7 +117008,7 @@*Thread Reply:*

I can now see this

@@ -117487,11 +118164,15 @@*Thread Reply:* but when i click on the job i then get this

@@ -117548,11 +118229,15 @@*Thread Reply:* @George Polychronopoulos Hi, I am facing the same issue. After adding spark conf and using the docker run command, marquez is still showing empty. Do I need to change something in the run command?

@@ -119539,11 +120224,15 @@ +

+

Expected. vs Actual.

The OL-spark version is matching the Spark version? Is there a known issues with the Spark / OL versions ?

@@ -124345,20 +125100,28 @@*Thread Reply:* I assume the problem is somewhere there, not on the level of facet definition, since SchemaDatasetFacet looks pretty much the same and it works

*Thread Reply:*

@@ -125192,11 +125967,15 @@*Thread Reply:* I think the code here filters out those string values in the list

@@ -125426,11 +126205,15 @@ +

+  @@ -126744,6 +127527,130 @@

@@ -126744,6 +127527,130 @@ *Thread Reply:* @Paweł Leszczyński @Maciej Obuchowski +can you please approve this CI to run integration tests? +https://app.circleci.com/pipelines/github/OpenLineage/OpenLineage/9497/workflows/4a20dc95-d5d1-4ad7-967c-edb6e2538820

+ + + +*Thread Reply:* @Paweł Leszczyński +only 2 spark version are sending empty +input and output +for both START and COMPLETE event

+ +++ + + +• 3.4.2 + • 3.5.0 + i can look into the above , if you guide me a bit on how to ? + should i open a new ticket for it? + please suggest how to proceed?

+

*Thread Reply:* this integration test case lead to finding of the above bug for spark 3.4.2 and 3.5.0 +will that be a blocker to merge this test case? +@Paweł Leszczyński @Maciej Obuchowski

+ + + +*Thread Reply:* @Paweł Leszczyński @Maciej Obuchowski +any direction on the above blocker will be helpful.

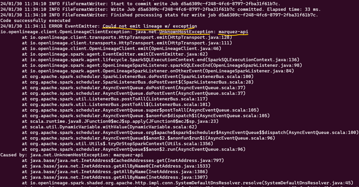

+ + + +I was doing this a second ago and this ended up with Caused by: java.lang.ClassNotFoundException: io.openlineage.spark.agent.OpenLineageSparkListener not found in com.databricks.backend.daemon.driver.ClassLoaders$LibraryClassLoader@1609ed55

*Thread Reply:* Can you please share with me your json conf for the cluster ?

@@ -128901,11 +129816,15 @@*Thread Reply:* It's because in mu build file I have

@@ -128936,11 +129855,15 @@*Thread Reply:* and the one that was copied is

@@ -132181,20 +133104,28 @@Hello, I'm currently in the process of following the instructions outlined in the provided getting started guide at https://openlineage.io/getting-started/. However, I've encountered a problem while attempting to complete *Step 1* of the guide. Unfortunately, I'm encountering an internal server error at this stage. I did manage to successfully run Marquez, but it appears that there might be an issue that needs to be addressed. I have attached screen shots.

@@ -132251,11 +133182,15 @@*Thread Reply:* @Jakub Dardziński 5000 port is not taken by any other application. The logs show some errors but I am not sure what is the issue here.

@@ -134980,11 +135915,15 @@*Thread Reply:* This is the error message:

@@ -135041,11 +135980,15 @@I am trying to run Google Cloud Composer where i have added the openlineage-airflow pypi packagae as a dependency and have added the env OPENLINEAGEEXTRACTORS to point to my custom extractor. I have added a folder by name dependencies and inside that i have placed my extractor file, and the path given to OPENLINEAGEEXTRACTORS is dependencies.<filename>.<extractorclass_name>…still it fails with the exception saying No module named ‘dependencies’. Can anyone kindly help me out on correcting my mistake

@@ -135365,11 +136308,15 @@*Thread Reply:*

@@ -135427,11 +136374,15 @@*Thread Reply:*

@@ -135488,11 +136439,15 @@*Thread Reply:* https://openlineage.slack.com/files/U05QL7LN2GH/F05SUDUQEDN/screenshot_2023-09-13_at_5.31.22_pm.png

@@ -135679,7 +136634,7 @@*Thread Reply:* these are the worker pod logs…where there is no log of openlineageplugin

*Thread Reply:* this is one of the experimentation that i have did, but then i reverted it back to keeping it to dependencies.bigqueryinsertjobextractor.BigQueryInsertJobExtractor…where dependencies is a module i have created inside my dags folder

@@ -135856,11 +136815,15 @@*Thread Reply:* https://openlineage.slack.com/files/U05QL7LN2GH/F05RM6EV6DV/screenshot_2023-09-13_at_12.38.55_am.png

@@ -135891,11 +136854,15 @@*Thread Reply:* these are the logs of the triggerer pod specifically

@@ -135978,11 +136945,15 @@*Thread Reply:* these are the logs of the worker pod at startup, where it does not complain of the plugin like in triggerer, but when tasks are run on this worker…somehow it is not picking up the extractor for the operator that i have written it for

@@ -136272,11 +137243,15 @@*Thread Reply:* have changed the dags folder where i have added the init file as you suggested and then have updated the OPENLINEAGEEXTRACTORS to bigqueryinsertjob_extractor.BigQueryInsertJobExtractor…still the same thing

@@ -136502,11 +137477,15 @@*Thread Reply:* I’ve done experiment, that’s how gcs looks like

@@ -136537,11 +137516,15 @@*Thread Reply:* and env vars

@@ -137171,7 +138154,7 @@*Thread Reply:*

I am attaching the log4j, there is no openlineagecontext

*Thread Reply:* A few more pics:

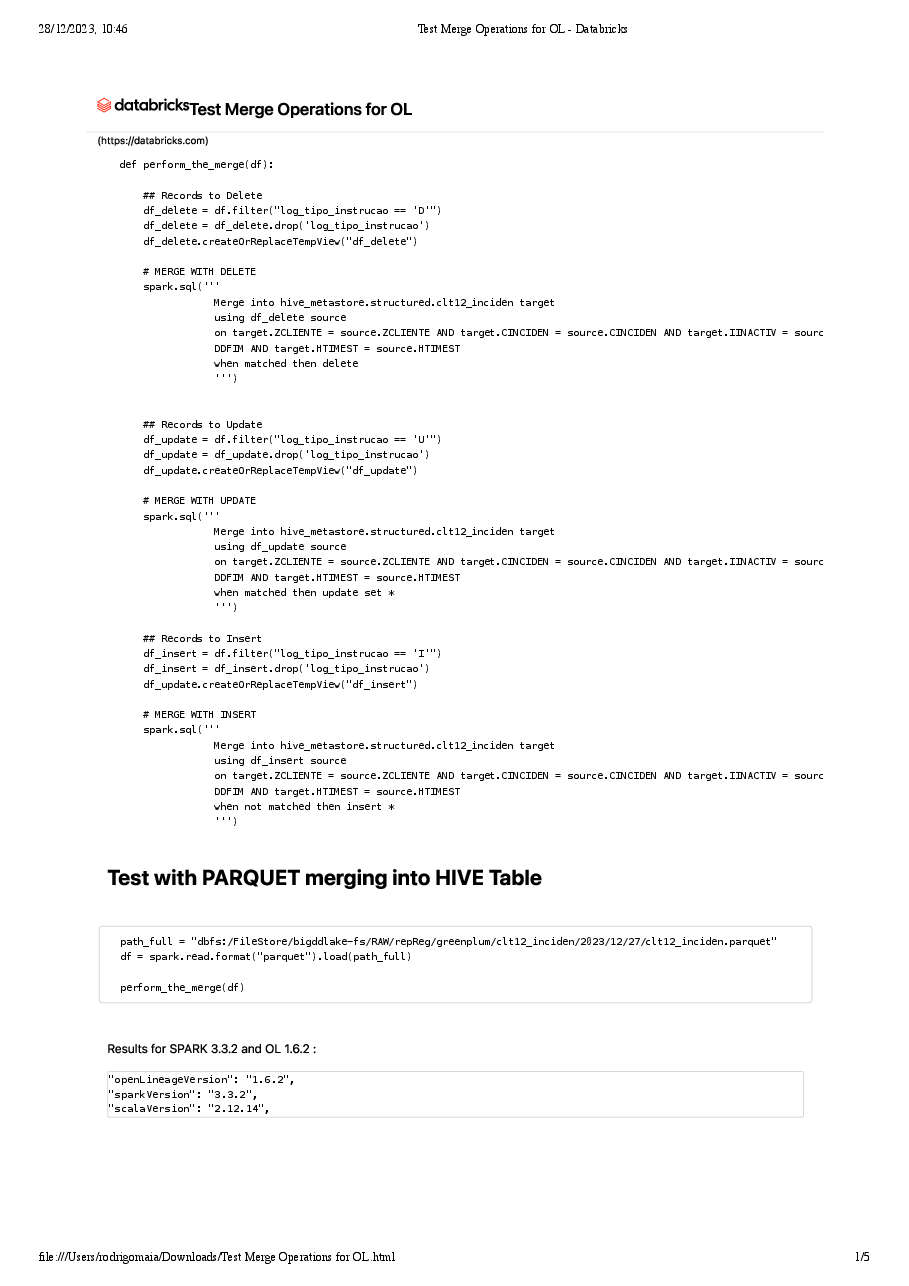

@@ -143258,16 +144273,20 @@@here I am trying out the openlineage integration of spark on databricks. There is no event getting emitted from Openlineage, I see logs saying OpenLineage Event Skipped. I am attaching the Notebook that i am trying to run and the cluster logs. Kindly can someone help me on this

*Thread Reply:* @Paweł Leszczyński this is what I am getting

@@ -144858,7 +145881,7 @@*Thread Reply:* attaching the html

*Thread Reply:* @Paweł Leszczyński you are right. This is what we are doing as well, combining events with the same runId to process the information on our backend. But even so, there are several runIds without this information. I went through these events to have a better view of what was happening. As you can see from 7 runIds, only 3 were showing the "environment-properties" attribute. Some condition is not being met here, or maybe it is what @Jason Yip suspects and there's some sort of filtering of unnecessary events

@@ -146215,11 +147242,15 @@*Thread Reply:* In docker, marquez-api image is not running and exiting with the exit code 127.

@@ -146765,11 +147796,15 @@Im upgrading the version from openlineage-airflow==0.24.0 to openlineage-airflow 1.4.1 but im seeing the following error, any help is appreciated

@@ -147274,11 +148309,15 @@*Thread Reply:* I see the difference of calling in these 2 versions, current versions checks if Airflow is >2.6 then directly runs on_running but earlier version was running on separate thread. IS this what's raising this exception?

@@ -148593,7 +149632,7 @@*Thread Reply:*

@Paweł Leszczyński I tested 1.5.0, it works great now, but the environment facets is gone in START... which I very much want it.. any thoughts?

@Paweł Leszczyński I went back to 1.4.1, output does show adls location. But environment facet is gone in 1.4.1. It shows up in 1.5.0 but namespace is back to dbfs....

like ( file_name, size, modification time, creation time )

@@ -154451,11 +155494,15 @@execute_spark_script(1, "/home/haneefa/airflow/dags/saved_files/")

@@ -155287,12 +156334,12 @@ I was referring to fluentd openlineage proxy which lets users copy the event and send it to multiple backend. Fluentd has a list of out-of-the box output plugins containing BigQuery, S3, Redshift and others (https://www.fluentd.org/dataoutputs)

+

+  @@ -157316,7 +158363,7 @@

@@ -157316,7 +158363,7 @@ *Thread Reply:* This text file contains a total of 10-11 events, including the start and completion events of one of my notebook runs. The process is simply reading from a Hive location and performing a full load to another Hive location.

+

+  @@ -161188,12 +162235,12 @@

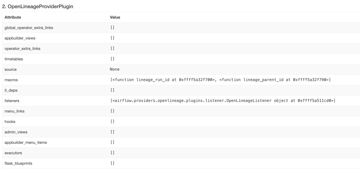

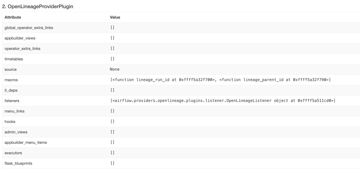

@@ -161188,12 +162235,12 @@ *Thread Reply:* in Admin > Plugins can you see whether you have OpenLineageProviderPlugin and if so, are there listeners?

+

+  @@ -161292,7 +162339,7 @@

@@ -161292,7 +162339,7 @@ *Thread Reply:* Dont

*Thread Reply:*

+

+  @@ -163600,7 +164680,7 @@

@@ -163600,7 +164680,7 @@ Do we have the functionality to search on the lineage we are getting?

+

+ *Thread Reply:*

any suggestions on naming for Graph API sources from outlook? I pull a lot of data from email attachments with Airflow. generally I am passing a resource (email address), the mailbox, and subfolder. from there I list messages and find attachments

+

+ Hello team I see the following issue when i install apache-airflow-providers-openlineage==1.4.0

+

+  +

+  @@ -168984,12 +170064,12 @@

@@ -168984,12 +170064,12 @@  +

+  @@ -169177,6 +170257,32 @@